13.1 Decision-Making

Cognitive Psychology

Representativeness

Humans pay particular attention to stimuli that are salient—things that are unique, negative, colorful, bright, & moving. In many cases, we base our judgments on information that seems to represent, or match, what we expect will happen. When we do so, we are using the representativeness heuristic.

Cognitive accessibility refers to the extent to which knowledge is activated in memory & thus likely to be used to guide our reactions to others. The tendency to overuse accessible social constructs can lead to errors in judgment, such as the availability heuristic & the false consensus bias. Counterfactual thinking about what might have happened & the tendency to anchor on an initial construct & not adjust sufficiently from it are also influenced by cognitive accessibility.

You can use your understanding of social cognition to better understand how you think accurately—but also sometimes inaccurately—about yourself & others.

Although which characteristics we use to think about objects or people is determined in part by the salience of their characteristics (our perceptions are influenced by our social situation), individual differences in the person who is doing the judging are also important (our perceptions are influenced by person variables). People vary in the schemas that they find important to use when judging others & when thinking about themselves. One way to consider this importance is in terms of the cognitive accessibility of the schema. Cognitive accessibility refers to the extent to which a schema is activated in memory & thus likely to be used in information processing.

You probably know people who are golf nuts (or maybe tennis or some other sport nuts). All they can talk about is golf. For them, we would say that golf is a highly accessible construct. Because they love golf, it is important to their self-concept; they set many of their goals in terms of the sport, & they tend to think about things & people in terms of it (“if he plays golf, he must be a good person!”). Other people have highly accessible schemas about eating healthy food, exercising, environmental issues, or really good coffee, for instance. In short, when a schema is accessible, we are likely to use it to make judgments of ourselves & others.

Although accessibility can be considered a person variable (a given idea is more highly accessible for some people than for others), accessibility can also be influenced by situational factors. When we have recently or frequently thought about a given topic, that topic becomes more accessible & is likely to influence our judgments. This is in fact the explanation for the results of the priming study you read about earlier—people walked slower because the concept of elderly had been primed & thus was currently highly accessible for them.

Because we rely so heavily on our schemas & attitudes—and particularly on those that are salient & accessible—we can sometimes be overly influenced by them. Imagine, for instance, that I asked you to close your eyes & determine whether there are more words in the English language that begin with the letter R or that have the letter R as the third letter. You would probably try to solve this problem by thinking of words that have each of the characteristics. It turns out that most people think there are more words that begin with R, even though there are in fact more words that have R as the third letter.

You can see that this error can occur as a result of cognitive accessibility. To answer the question, we naturally try to think of all the words that we know that begin with R & that have R in the third position. The problem is that when we do that, it is much easier to retrieve the former than the latter, because we store words by their first, not by their third, letter. We may also think that our friends are nice people because we see them primarily when they are around us (their friends). Similarly, the traffic might seem worse in our own neighborhood than we think it is in other places, in part because nearby traffic jams are more accessible for us than are traffic jams that occur somewhere else. And do you think it is more likely that you will be killed in a plane crash or in a car crash? Many people fear the former, even though the latter is much more likely: Your chances of being involved in an aircraft accident are about 1 in 11 million, whereas your chances of being killed in an automobile accident are 1 in 5,000; over 50,000 people are killed on U.S. highways every year.

In this case, the problem is that plane crashes, which are highly salient, are more easily retrieved from our memory than are car crashes, which are less extreme.
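To put the two figures quoted above side by side, here is a minimal Python sketch. It only restates the odds given in the text (1 in 11 million & 1 in 5,000); the variable names are illustrative. Note that the two figures describe slightly different events (being involved in an aircraft accident versus being killed in a car accident), so the ratio is a rough comparison rather than a precise one.

```python
from fractions import Fraction

# Odds as quoted in the text above (not independently verified here).
p_plane = Fraction(1, 11_000_000)  # involved in an aircraft accident
p_car = Fraction(1, 5_000)         # killed in an automobile accident

# How many times larger the quoted car risk is than the quoted plane risk.
risk_ratio = p_car / p_plane
print(f"Quoted car risk is {risk_ratio}x the quoted plane risk")
```

On the quoted figures, the car risk is 2,200 times the plane risk, yet the plane crash is the one that comes to mind more easily.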

The tendency to make judgments of the frequency of an event, or the likelihood that an event will occur, on the basis of the ease with which the event can be retrieved from memory is known as the availability heuristic (Schwarz & Vaughn, 2002; Tversky & Kahneman, 1973).

The idea is that things that are highly accessible (in this case, the term availability is used) come to mind easily & thus may overly influence our judgments. Thus, despite the clear facts, it may be easier to think of plane crashes than of car crashes because the former are so highly salient. If so, the availability heuristic can lead to errors in judgments.

Still another way that the cognitive accessibility of constructs can influence information processing is through their effects on processing fluency. Processing fluency refers to the ease with which we can process information in our environments. When stimuli are highly accessible, they can be quickly attended to & processed, & they therefore have a large influence on our perceptions. This influence is due, in part, to the fact that our body reacts positively to information that we can process quickly, & we use this positive response as a basis of judgment (Reber, Winkielman, & Schwarz, 1998; Winkielman & Cacioppo, 2001).

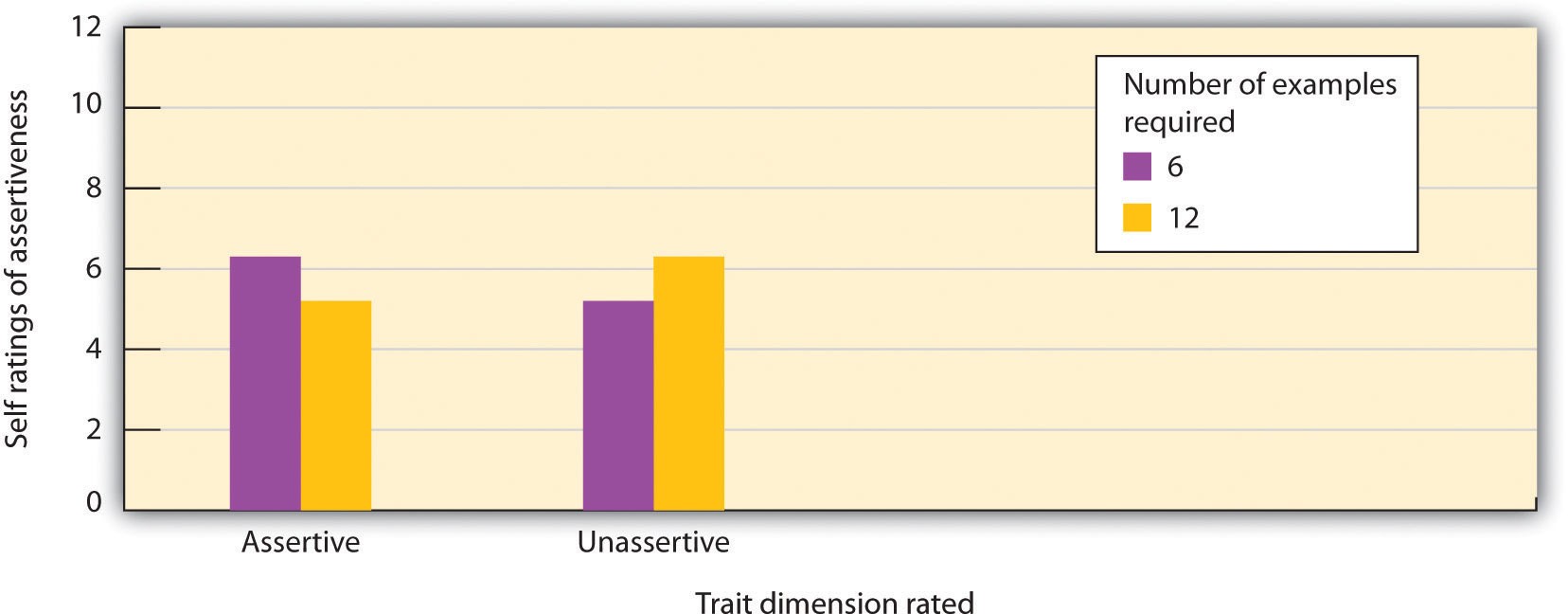

In one study demonstrating this effect, Norbert Schwarz & his colleagues (Schwarz et al., 1991) asked one set of college students to list 6 occasions when they had acted either assertively or unassertively & asked another set of college students to list 12 such examples. Schwarz determined that for most students, it was pretty easy to list 6 examples but pretty hard to list 12.

The researchers then asked the participants to indicate how assertive or unassertive they actually were. You can see from Figure 1 “Processing Fluency” that the ease of processing influenced judgments. The participants who had an easy time listing examples of their behavior (because they only had to list 6 instances) judged that they did in fact have the characteristics they were asked about (either assertive or unassertive), in comparison with the participants who had a harder time doing the task (because they had to list 12 instances). Other research has found similar effects—people rate that they ride their bicycles more often after they have been asked to recall only a few rather than many instances of doing so (Aarts & Dijksterhuis, 1999), & they hold an attitude with more confidence after being asked to generate few rather than many arguments that support it (Haddock, Rothman, Reber, & Schwarz, 1999).

Figure 1. Processing Fluency

When it was relatively easy to complete the questionnaire (only 6 examples were required), the student participants rated that they had more of the trait than when the task was more difficult (12 answers were required). Data are from Schwarz et al. (1991).

We are likely to use this type of quick & “intuitive” processing, based on our feelings about how easy it is to complete a task, when we don’t have much time or energy for more in-depth processing, such as when we are under time pressure, tired, or unwilling to process the stimulus in sufficient detail. Of course, it is very adaptive to respond to stimuli quickly (Sloman, 2002; Stanovich & West, 2002; Winkielman, Schwarz, & Nowak, 2002), & it is not impossible that in at least some cases, we are better off making decisions based on our initial responses than on a more thoughtful cognitive analysis (Loewenstein, Weber, Hsee, & Welch, 2001). For instance, Dijksterhuis, Bos, Nordgren, & van Baaren (2006) found that when participants were given tasks requiring decisions that were very difficult to make on the basis of a cognitive analysis of the problem, they made better decisions when they didn’t try to analyze the details carefully but simply relied on their unconscious intuition.

In sum, people are influenced not only by the information they get but by how they get it. We are more highly influenced by things that are salient & accessible & thus easily attended to, remembered, & processed. On the other hand, information that is harder to access from memory, is less likely to be attended to, or takes more effort to consider is less likely to be used in our judgments, even if this information is statistically equally informative or even more informative.

How tall do you think Mt. Everest is? (Don’t Google it; that kind of defeats the purpose.) You probably don’t know the exact number, but do you think it’s taller or shorter than 150 feet? Assuming you said “taller,” make a guess. How tall do you think it is?

Figure 2. Mt. Everest

Mt. Everest is roughly 29,000 ft. in height.

How’d you do? If I were to guess, based on the psychology I’m about to share with you, you probably undershot it. Even if you didn’t, most people would. The reason is what’s called the anchoring heuristic.

Back in the 1970s, Amos Tversky & Daniel Kahneman identified a few reliable mental shortcuts people use when they have to make judgments. Oh, you thought people were completely rational every time they make a decision? It’s nice to think, but it’s not always what happens. We’re busy! We have lives! I can’t sit around & do the math anytime I want to know how far away a stop sign is, so I make estimates based on pretty reliable rules of thumb. The tricks we use to do that are called heuristics.

The Basics of the Anchoring Heuristic

The basic idea of anchoring is that when we’re making a numerical estimate, we’re often biased by the number we start at. In the case of the Mt. Everest estimate, I gave you the starting point of 150 feet. You thought “Well, it’s taller than that,” so you likely adjusted the estimate from 150 feet to something taller than that. The tricky thing, though, is that we don’t often adjust far enough away from the anchor.

Let’s jump into an alternate timeline and think about how things could have gone differently. Instead of starting you at 150 feet, this time I ask you whether Mt. Everest is taller or shorter than 300,000 feet. This time you’d probably end up at a final estimate that’s bigger than the correct answer. The reason is you’d start at 300,000 & start adjusting down, but you’d probably stop before you got all the way down to the right answer.
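One simple way to picture insufficient adjustment is a toy model in which the final estimate moves only part of the way from the anchor toward the value a person would otherwise settle on. The sketch below is an illustration, not a model taken from the anchoring literature: the function name `anchored_estimate` & the adjustment fraction of 0.6 are assumptions chosen for the example, & 29,000 ft is the rough height given above.

```python
def anchored_estimate(anchor: float, target: float, adjustment: float = 0.6) -> float:
    """Move from the anchor toward the target, but only part of the way.

    adjustment = 1.0 would mean full adjustment (no anchoring bias);
    values below 1.0 leave the estimate stuck closer to the anchor.
    The default of 0.6 is an arbitrary, assumed value.
    """
    if not 0.0 <= adjustment <= 1.0:
        raise ValueError("adjustment must be between 0 and 1")
    return anchor + adjustment * (target - anchor)


EVEREST_FT = 29_000  # rough height quoted in the text

low = anchored_estimate(anchor=150, target=EVEREST_FT)
high = anchored_estimate(anchor=300_000, target=EVEREST_FT)

print(f"Low anchor (150 ft):      estimate = {low:,.0f} ft")
print(f"High anchor (300,000 ft): estimate = {high:,.0f} ft")
```

With these assumed numbers, the low anchor produces an underestimate & the high anchor an overestimate, which is the pattern described above.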

Coming Up With Your Own Anchors

In general, this is a strategy that tends to work for people. After all, when we don’t know an exact number, how are we supposed to figure it out? It seems pretty reasonable to start with a concrete anchor & go from there.

In fact, some research has shown that this is how people make these estimates when left to their own devices. Rather than work from an anchor that’s given to them (like in the Mt. Everest example), people will make their own anchor, a “self-generated anchor.”

For example, if you ask someone how many days it takes Mercury to orbit the sun, she’ll likely start at 365 (the number of days it takes Earth to do so) & then adjust downward. But of course, people usually don’t adjust far enough.

Biased By Completely Arbitrary Starting Points

Figure 3. A game of Roulette

This paints an interesting picture of how we strive to be reasonable by adopting a pretty decent strategy for coming up with numerical estimates. When you think about it, even though we’re biased by the starting point, it sounds like a decent strategy. After all, you’ve got to start somewhere!

But what if the starting point is totally arbitrary? Sure, the “150 feet” anchor from before probably seems pretty arbitrary, but at the time you might have thought “Why would he have started me at 150 feet? It must be a meaningful starting point.”

The truth is that these anchors bias judgments even when everyone realizes how arbitrary they are. To test this idea, one study asked people to guess the percentage of African countries in the United Nations. To generate a starting point, though, the researchers spun a “Wheel-of-Fortune” type of wheel with numbers from 0 to 100.

For whichever number the wheel landed on, people said whether they thought the real answer was more or less than that number. Even these random anchors ended up biasing people’s estimates. If the wheel had landed on 10, people tended to say about 25% of countries in the UN are African, but if the wheel had landed on 65, they tended to say about 45% of countries in the UN are African. That’s a pretty big difference in estimates, & it comes from a random change in a completely arbitrary value.
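A rough way to express how strongly the wheel pulled the estimates is to divide the gap between the two groups’ estimates by the gap between the two anchors. The sketch below computes that ratio for the figures quoted above. The function name `anchor_pull` is made up for this example, & the ratio is a simple descriptive summary, not a statistic reported by the study.

```python
def anchor_pull(low_anchor, high_anchor, low_estimate, high_estimate):
    """Share of the gap between anchors that shows up in the estimates.

    0 means the anchors made no difference; 1 means the estimates moved
    as far apart as the anchors themselves.
    """
    return (high_estimate - low_estimate) / (high_anchor - low_anchor)


# Figures quoted in the text: wheel at 10 -> about 25%, wheel at 65 -> about 45%.
pull = anchor_pull(low_anchor=10, high_anchor=65, low_estimate=25, high_estimate=45)
print(f"Anchor pull: {pull:.2f}")
```

On these figures, a bit more than a third of the 55-point gap between the anchors carried over into the estimates, even though the anchors came from a random spin.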

People’s judgments can even be biased by anchors based on their own social security numbers.

Through all of these examples, the anchor has been a key part of the judgment process. That is, someone says “Is it higher or lower than this anchor?” & then you make a judgment. But what if the starting point is in the periphery?

Even when some irrelevant number is just hanging out in the environment somewhere, it can still bias your judgments! These have been termed “incidental anchors.”

Figure 4. Restaurant seating

For example, participants in one study were given a description of a restaurant & asked to report how much money they would be willing to spend there. Two groups of people made this judgment, & the only difference between them is that for one group, the restaurant’s name was “Studio 17” & for the other group, the restaurant’s name was “Studio 97.” When the restaurant was “Studio 97,” people said they’d spend more money (an average of about $32) than when the restaurant was “Studio 17” (where they reported a willingness to spend about $24).

Other research has shown that people were willing to pay more money for a CD when a totally separate vendor was selling $80 sweatshirts, compared to when that other vendor was selling $10 sweatshirts.

In both of these examples, the anchor was completely irrelevant to the number judgments, & people weren’t even necessarily focused on the anchor. Even still, just having a number in the environment could bias people’s final judgments.

Raising the Anchor & Saying “Ahoy”

Across all of these studies, a consistent pattern emerges: even arbitrary starting points end up biasing numerical judgments. Whether we’re judging prices, heights, ages, or percentages, the number we start at keeps us from reaching the most accurate final answer.

Figure 5. Lifting our mental anchors

This has turned out to be a well-studied phenomenon as psychologists have explored the limits of its effects. Some results have shown that anchoring effects depend on your personality, and others have shown that they depend on your mood.

In fact, there’s still some debate over how anchoring works. Whereas some evidence argues for the original conception that people adjust their estimates from a starting point, others argue for a “selective accessibility” model in which people entertain a variety of specific hypotheses before settling on an answer. Still others have provided evidence suggesting that anchoring works similarly to persuasion.

Overall, however, the anchoring effect appears robust, & when you’re in the throes of numerical estimates, think about whether your answer could have been biased by other numbers floating around.

Problem 2 (adapted from Joyce & Biddle, 1981):

We know that executive fraud occurs & that it has been associated with many recent financial scandals. And, we know that many cases of management fraud go undetected even when annual audits are performed. Do you think that the incidence of significant executive-level management fraud is more than 10 in 1,000 firms (that is, 1 percent) audited by Big Four accounting firms?

Yes, more than 10 in 1,000 Big Four clients have significant executive-level management fraud.

No, fewer than 10 in 1,000 Big Four clients have significant executive-level management fraud.

What is your estimate of the number of Big Four clients per 1,000 that have significant executive-level management fraud? (Fill in the blank below with the appropriate number.)

_____ in 1,000 Big Four clients have significant executive-level management fraud.

Regarding the second problem, people vary a great deal in their final assessment of the level of executive-level management fraud, but most think that 10 out of 1,000 is too low. When I run this exercise in class, half of the students respond to the question that I asked you to answer.

The other half receive a similar problem, but instead are asked whether the correct answer is higher or lower than 200 rather than 10. Most people think that 200 is high. But, again, most people claim that this “anchor” does not affect their final estimate. Yet, on average, people who are presented with the question that focuses on the number 10 (out of 1,000) give answers that are about one-half the size of the estimates of those facing questions that use an anchor of 200. When we are making decisions, any initial anchor that we face is likely to influence our judgments, even if the anchor is arbitrary. That is, we insufficiently adjust our judgments away from the anchor.

Problem 3 (adapted from Tversky & Kahneman, 1981):

Imagine that the United States is preparing for the outbreak of an unusual avian disease that is expected to kill 600 people. Two alternative programs to combat the disease have been proposed. Assume that the exact scientific estimates of the consequences of the programs are as follows.

Program A: If Program A is adopted, 200 people will be saved.

Program B: If Program B is adopted, there is a one-third probability that 600 people will be saved & a two-thirds probability that no people will be saved.

Which of the two programs would you favor?

Turning to Problem 3, most people choose Program A, which saves 200 lives for sure, over Program B. But, again, if I were in front of a classroom, only half of my students would receive this problem. The other half would receive the same set-up, but with the following two options:

Program C: If Program C is adopted, 400 people will die.

Program D: If Program D is adopted, there is a one-third probability that no one will die & a two-thirds probability that 600 people will die.

Which of the two programs would you favor?

Careful review of the two versions of this problem clarifies that they are objectively the same. Saving 200 people (Program A) means losing 400 people (Program C), & Programs B & D are also objectively identical. Yet, in one of the most famous problems in judgment & decision making, most individuals choose Program A in the first set & Program D in the second set (Tversky & Kahneman, 1981). People respond very differently to saving versus losing lives—even when the difference is based just on the “framing” of the choices.
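The claim that the two versions are objectively the same can be checked with a few lines of arithmetic. The sketch below restates each program as a list of (probability, lives saved) outcomes, converting the “will die” wording of Programs C & D into lives saved out of the 600 at risk. The data layout & the helper name `expected_saved` are choices made for this illustration.

```python
from fractions import Fraction

TOTAL_AT_RISK = 600

# Each program is a list of (probability, number of people saved) outcomes.
# Programs C and D are stated in deaths, so they are converted to lives saved.
programs = {
    "A": [(Fraction(1), 200)],
    "B": [(Fraction(1, 3), 600), (Fraction(2, 3), 0)],
    "C": [(Fraction(1), TOTAL_AT_RISK - 400)],
    "D": [(Fraction(1, 3), TOTAL_AT_RISK - 0), (Fraction(2, 3), TOTAL_AT_RISK - 600)],
}


def expected_saved(outcomes):
    """Probability-weighted average number of people saved."""
    return sum(p * saved for p, saved in outcomes)


expected = {name: expected_saved(outcomes) for name, outcomes in programs.items()}
for name, value in expected.items():
    print(f"Program {name}: expected lives saved = {value}")
```

Once everything is expressed in lives saved, A & C are the same sure outcome, B & D are the same gamble, & all four programs have an expected value of 200 lives saved. Only the wording differs.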

The problem that I asked you to respond to was framed in terms of saving lives, & the implied reference point was the worst outcome of 600 deaths. Most of us, when we make decisions that concern gains, are risk averse; as a consequence, we lock in the possibility of saving 200 lives for sure. In the alternative version, the problem is framed in terms of losses. Now the implicit reference point is the best outcome of no deaths due to the avian disease. And in this case, most people are risk seeking when making decisions regarding losses.

These are just three of the many biases that affect even the smartest among us. Other research shows that we are biased in favor of information that is easy for our minds to retrieve, are insensitive to the importance of base rates & sample sizes when we are making inferences, assume that random events will always look random, search for information that confirms our expectations even when disconfirming information would be more informative, claim a priori knowledge that didn’t exist due to the hindsight bias, & are subject to a host of other effects that continue to be developed in the literature (Bazerman & Moore, 2013).

Sunk cost is a term used in economics referring to non-recoverable investments of time or money. The trap occurs when a person’s aversion to loss impels them to throw good money after bad, because they don’t want to waste their earlier investment. This aversion is vulnerable to manipulation. The more time & energy a cult recruit can be persuaded to spend with the group, the more “invested” they will feel, and, consequently, the more of a loss it will feel to leave that group. Consider the advice of billionaire investor Warren Buffett: “When you find yourself in a hole, the best thing you can do is stop digging” (Levine, 2003).
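The way out of the sunk cost trap is to compare only what still lies ahead. As a minimal sketch, the hypothetical function below takes the sunk cost as an argument but deliberately ignores it; the function name & the dollar figures are invented for illustration.

```python
def should_continue(future_benefit: float, future_cost: float, sunk_cost: float = 0.0) -> bool:
    """Decide whether to keep going, looking only at what lies ahead.

    sunk_cost is accepted as an argument to make the point explicit:
    it is already spent either way, so it plays no part in the decision.
    """
    return future_benefit > future_cost


# Hypothetical project: 9,000 already spent, 5,000 more needed, 3,000 expected payoff.
decision = should_continue(future_benefit=3_000, future_cost=5_000, sunk_cost=9_000)
print("Continue?", decision)
```

However large the earlier investment, the answer here is the same: spending 5,000 more to get 3,000 back is not worth it.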

Hindsight bias is the opposite of overconfidence bias: it occurs when we look backward in time & the mistakes that were made seem obvious after they have already occurred. In other words, after a surprising event has occurred, many individuals are likely to think that they already knew it was going to happen. This may be because they are selectively reconstructing the events. Hindsight bias becomes a problem especially when judging someone else’s decisions. For example, let’s say a company driver hears the engine making unusual sounds before starting her morning routine. Being familiar with this car in particular, the driver may conclude that the probability of a serious problem is small & continue to drive the car. During the day, the car malfunctions, stranding her away from the office. It would be easy to criticize her decision to continue to drive the car because, in hindsight, the noises heard in the morning would make us believe that she should have known something was wrong & she should have taken the car in for service. However, the driver may have heard similar sounds before with no consequences, so based on the information available to her at the time, she may have made a reasonable choice. Therefore, it is important for decision makers to remember this bias before passing judgments on other people’s actions.

Illusory Correlations

The temptation to make erroneous cause-and-effect statements based on correlational research is not the only way we tend to misinterpret data. We also tend to make the mistake of illusory correlations, especially with unsystematic observations. Illusory correlations, or false correlations, occur when people believe that relationships exist between two things when no such relationship exists. One well-known illusory correlation is the supposed effect that the moon’s phases have on human behavior. Many people passionately assert that human behavior is affected by the phase of the moon, & specifically, that people act strangely when the moon is full (Figure 6).

Figure 6. Many people believe that a full moon makes people behave oddly. (credit: Cory Zanker)

There is no denying that the moon exerts a powerful influence on our planet. The ebb & flow of the ocean’s tides are tightly tied to the gravitational forces of the moon. Many people believe, therefore, that it is logical that we are affected by the moon as well. After all, our bodies are largely made up of water. A meta-analysis of nearly 40 studies consistently demonstrated, however, that the relationship between the moon & our behavior does not exist (Rotton & Kelly, 1985). While we may pay more attention to odd behavior during the full phase of the moon, the rates of odd behavior remain constant throughout the lunar cycle.
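To see how constant rates can still feel like a pattern, it helps to lay out all four combinations of moon phase & behavior rather than only the memorable one. The tallies in this sketch are hypothetical numbers invented for illustration (they are not data from Rotton & Kelly); the point is only that the rate of odd behavior has to be compared across rows.

```python
# Hypothetical tallies of nights, invented for illustration only.
# Rows: moon phase; columns: whether odd behavior was observed that night.
counts = {
    "full moon": {"odd": 3, "not odd": 27},
    "other nights": {"odd": 30, "not odd": 270},
}


def odd_rate(row):
    """Proportion of nights in this row on which odd behavior was observed."""
    return row["odd"] / (row["odd"] + row["not odd"])


rates = {phase: odd_rate(row) for phase, row in counts.items()}
for phase, rate in rates.items():
    print(f"{phase}: {rate:.0%} of nights had odd behavior")
```

In this made-up table, the three odd full-moon nights are the ones likely to be remembered, but the rate of odd behavior is the same in both rows, so there is no relationship to explain.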

Why are we so apt to believe in illusory correlations like this? Often we read or hear about them & simply accept the information as valid. Or, we have a hunch about how something works & then look for evidence to support that hunch, ignoring evidence that would tell us our hunch is false; this is known as confirmation bias. Other times, we find illusory correlations based on the information that comes most easily to mind, even if that information is severely limited. And while we may feel confident that we can use these relationships to better understand & predict the world around us, illusory correlations can have significant drawbacks. For example, research suggests that illusory correlations—in which certain behaviors are inaccurately attributed to certain groups—are involved in the formation of prejudicial attitudes that can ultimately lead to discriminatory behavior.

Confirmation Bias

Confirmation bias is a person’s tendency to seek, interpret & use evidence in a way that conforms to their existing beliefs. This can lead a person to make certain mistakes, such as forming poor judgments that limit their ability to learn, changing their beliefs to justify past actions, & acting in a hostile manner towards people who disagree with them. Confirmation bias can lead a person to perpetuate stereotypes or cause a doctor to inaccurately diagnose a condition.

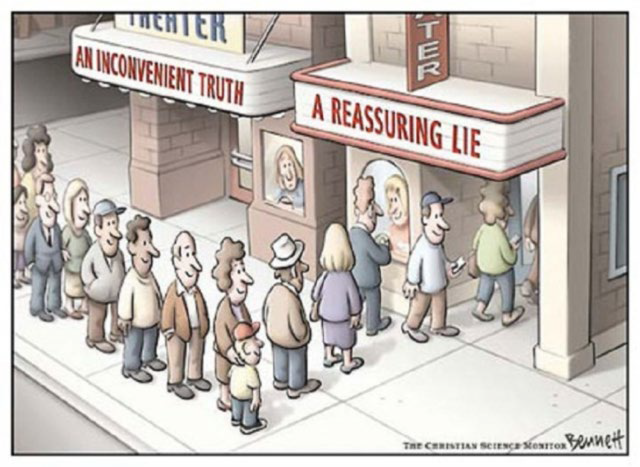

Figure 7. Most people use confirmation bias unwittingly because it is usually easier to cling to a reassuring lie than an inconvenient truth.

What is noteworthy about confirmation bias is that it supports the argumentative theory of reason. Although confirmation bias is almost universally deplored as a regrettable failing of reason in others, the argumentative theory explains that this bias is adaptive because it aids in forming persuasive arguments by preventing us from being distracted by useless evidence & unhelpful stories.

Interestingly, Charles Darwin made a practice of recording evidence against his theory in a special notebook, because he found that this contradictory evidence was particularly difficult to remember.

Belief perseverance occurs when a person holds on to a previous belief even in the face of clear evidence against it. Many people in the skeptic community are often frustrated when, after they have laid out so many sound arguments based on clear reasoning, they still can’t seem to change what someone believes. Once you believe something, it is easy to see the reasons why you hold that belief, but for others it seems impossible. Try as you might to share your beliefs with others, you still fail at winning them to your side.

“The human understanding when it has once adopted an opinion draws all things else to support & agree with it. And though there be a greater number & weight of instances to be found on the other side, yet these it either neglects & despises, or else by some distinction sets aside & rejects.”

– Francis Bacon

What we are talking about here is, at the least, confirmation bias, the tendency to seek only information that supports one’s previous belief & reject information that refutes it. But there is also the issue of belief perseverance. Much of this stems from people’s preference for certainty & continuity. We like our knowledge to be consistent, linear, & absolute. “I already came to a conclusion & am absolutely certain that what I believe is true. I no longer want to think about it. If I exert all of the work required to admit that I am wrong & was wrong, there will be a lot of additional work to learn & integrate that new information. In the meantime, I will have a very difficult time functioning. My life will be much easier if I simply accept that my previous belief was true.” Or as Daniel Kahneman says:

“Sustaining doubt is harder work than sliding into certainty.”

– Daniel Kahneman

Problem 1 (adapted from Alpert & Raiffa, 1969):

Listed below are 10 uncertain quantities. Do not look up any information on these items. For each, write down your best estimate of the quantity. Next, put a lower & upper bound around your estimate, such that you are 98 percent confident that your range surrounds the actual quantity. Respond to each of these items even if you admit to knowing very little about these quantities.

1. The first year the Nobel Peace Prize was awarded

2. The date the French celebrate “Bastille Day”

3. The distance from the Earth to the Moon

4. The height of the Leaning Tower of Pisa

5. Number of students attending Oxford University (as of 2014)

6. Number of people who have traveled to space (as of 2013)

7. 2012-2013 annual budget for the University of Pennsylvania

8. Average life expectancy in Bangladesh (as of 2012)

9. World record for pull-ups in a 24-hour period

10. Number of colleges & universities in the Boston metropolitan area

On the first problem, if you set your ranges so that you were justifiably 98 percent confident, you should expect that approximately 9.8, or nine to 10, of your ranges would include the actual value. So, let’s look at the correct answers:

Figure 8. Overconfidence is a natural part of most people’s decision-making process & this can get us into trouble. Is it possible to overcome our faulty thinking? Perhaps. See the “Fixing Our Decisions” section below. [Image: Barn Images, https://goo.gl/BRvSA7]

1. 1901

2. 14th of July

3. 384,403 km (238,857 mi)

4. 56.67 m (186 ft)

5. 22,384 (as of 2014)

6. 536 people (as of 2013)

7. $6.007 billion

8. 70.3 years (as of 2012)

9. 4,321

10. 52

Count the number of your 98% ranges that actually surrounded the true quantities. If you surrounded nine to 10, you were appropriately confident in your judgments. But most readers surround only between three (30%) & seven (70%) of the correct answers, despite claiming 98% confidence that each range would surround the true value. As this problem shows, humans tend to be overconfident in their judgments.
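Scoring the exercise is a matter of counting hits, & a short calculation shows how unlikely the typical result would be for someone whose 98% ranges really were 98% ranges. The sketch below is illustrative: the helper names `count_hits` & `prob_at_most` are made up, the three example ranges are invented, & the probability calculation assumes the ten items are independent, which is a simplification.

```python
from math import comb


def count_hits(ranges, truths):
    """Count how many (low, high) ranges contain the corresponding true value."""
    return sum(low <= truth <= high for (low, high), truth in zip(ranges, truths))


def prob_at_most(k, n=10, p=0.98):
    """Binomial probability of k or fewer hits out of n, each with hit chance p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


# True values for three of the items, taken from the answer list above:
# Nobel Peace Prize year, Earth-Moon distance in km, Boston-area colleges.
truths = [1901, 384_403, 52]
# Hypothetical ranges a reader might have written down (invented for illustration).
ranges = [(1890, 1920), (100_000, 300_000), (20, 60)]

hits = count_hits(ranges, truths)
print(f"{hits} of {len(truths)} ranges contain the true value")

# Chance that a well-calibrated person captures 7 or fewer of 10 quantities.
print(f"P(7 or fewer hits out of 10) = {prob_at_most(7):.4f}")
```

Under these assumptions, a properly calibrated reader would capture seven or fewer of the ten quantities well under 1 percent of the time, so a typical score of three to seven hits points to ranges that were drawn too narrowly.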

In 1984, Jennifer Thompson was raped. During the attack, she studied the attacker’s face, determined to identify him if she survived the attack. When presented with a photo lineup, she identified Ronald Cotton as her attacker. Twice, she testified against him, even after seeing Bobby Poole, the man who boasted to fellow inmates that he had committed the crimes for which Cotton was convicted. After Cotton had served 10.5 years of his sentence, DNA testing conclusively proved that Poole was indeed the rapist.

Thompson has since become a critic of the reliability of eyewitness testimony. She was remorseful after learning that Cotton was an innocent man who was sent to prison. Upon release, Cotton was awarded $110,000 compensation from the state of North Carolina. Cotton & Thompson have reconciled to become close friends, & tour in support of eyewitness testimony reform.

One of the most remarkable aspects of Jennifer Thompson’s mistaken identity of Ronald Cotton was her certainty. But research reveals a pervasive cognitive bias toward overconfidence, which is the tendency for people to be too certain about their ability to accurately remember events & to make judgments. David Dunning & his colleagues (Dunning, Griffin, Milojkovic, & Ross, 1990) asked college students to predict how another student would react in various situations. Some participants made predictions about a fellow student whom they had just met & interviewed, & others made predictions about their roommates whom they knew very well. In both cases, participants reported their confidence in each prediction, & accuracy was determined by the responses of the people themselves. The results were clear: Regardless of whether they judged a stranger or a roommate, the participants consistently overestimated the accuracy of their own predictions.

Eyewitnesses to crimes are also frequently overconfident in their memories, & there is only a small correlation between how accurate & how confident an eyewitness is. The witness who claims to be absolutely certain about his or her identification (e.g., Jennifer Thompson) is not much more likely to be accurate than one who appears much less sure, making it almost impossible to determine whether a particular witness is accurate or not (Wells & Olson, 2003).

I am sure that you have a clear memory of when you first heard about the 9/11 attacks in 2001, & perhaps also when you heard that Princess Diana was killed in 1997 or when the verdict of the O. J. Simpson trial was announced in 1995. This type of memory, which we experience along with a great deal of emotion, is known as a flashbulb memory: a vivid & emotional memory of an unusual event that people believe they remember very well (Brown & Kulik, 1977).

People are very certain of their memories of these important events, & frequently overconfident. Talarico & Rubin (2003) tested the accuracy of flashbulb memories by asking students to write down their memory of how they had heard the news about either the September 11, 2001, terrorist attacks or about an everyday event that had occurred to them during the same time frame. These recordings were made on September 12, 2001. Then the participants were asked again, either 1, 6, or 32 weeks later, to recall their memories. The participants became less accurate in their recollections of both the emotional event & the everyday events over time. But the participants’ confidence in the accuracy of their memory of learning about the attacks did not decline over time. After 32 weeks the participants were overconfident; they were much more certain about the accuracy of their flashbulb memories than they should have been. Schmolck, Buffalo, & Squire (2000) found similar distortions in memories of news about the verdict in the O. J. Simpson trial.