2-3: Experimental Research Methods

Now we’re getting to the gold standard of research: experiments. If descriptive research is like taking photographs of organizational life, experimental research is like directing a movie. You’re not just observing what happens naturally — you’re systematically manipulating variables to see what causes what.

An experiment is a procedure carried out to support or refute a hypothesis, or to determine the efficacy or likelihood of something previously untried. Experiments provide insight into cause-and-effect by demonstrating what outcome occurs when a particular factor is manipulated (Hinkelmann & Kempthorne, 2008). The power of experimental research lies in its ability to establish causal relationships. This is what we call causal inference, the primary goal of experimental research. We want to conduct experiments so that we can infer causality from our studies, moving beyond the limitation that correlation does not imply causation!

Think about it this way: you could observe that successful teams tend to have daily check-in meetings, but that doesn’t tell you whether the meetings cause success or whether successful teams just happen to have more meetings. An experiment could randomly assign some teams to have daily check-ins while others continue their usual routines, then measure performance differences. If the check-in teams perform better, you’ve got evidence for causation rather than just correlation.

Key Terms: Independent and Dependent Variables

In experimental terminology, we have independent variables (IVs) and dependent variables (DVs). The IV is anything that is systematically manipulated by the researcher; it’s the predictor, precursor, antecedent, or cause. The DV is the variable of interest that we design our studies to assess; it serves as the criterion, outcome, consequence, or effect.

Think of independent variables as the “input” and dependent variables as the “output.” For example, if you’re testing whether flexible work schedules improve job satisfaction, the work schedule is your IV and job satisfaction is your DV. The basic idea is that changes in the IV cause changes in the DV.

In order to establish this basic causal finding, we must address extraneous variables, which brings us to the crucial concept of control.

Control: The Heart of Experimental Design

Extraneous variables (also known as “noise”) are variables that might affect the outcome of a study but are not manipulated by the researcher. The presence of extraneous variables is inevitable — they’re everywhere in real-world settings — but they interfere with your ability to make causal statements.

There are several ways to control extraneous variables:

- Hold them constant (everyone gets tested in the same room, at the same time of day)

- Systematically manipulate them (add them as another IV to your study)

- Statistically control for them (measure them and include them in your analyses)
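To make the third strategy concrete, here is a minimal Python sketch on simulated data. The numbers (a 5-point effect of the IV, 3 points per year of experience) and the extraneous variable `experience` are hypothetical. The function `adjusted_effect` is an illustrative helper, not a library call: it estimates the IV coefficient from a regression of the DV on both the IV and the measured covariate, using the Frisch-Waugh-Lovell result (residualize both on the covariate, then regress one residual on the other) so that only the standard library is needed.

```python
import random

def simple_ols(x, y):
    """Intercept and slope of the least-squares line y = a + b * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope

def residualize(values, covariate):
    """Strip out the part of `values` that is linearly predictable from the covariate."""
    a, b = simple_ols(covariate, values)
    return [v - (a + b * c) for v, c in zip(values, covariate)]

def adjusted_effect(treatment, outcome, covariate):
    """IV coefficient from outcome ~ treatment + covariate (Frisch-Waugh-Lovell)."""
    return simple_ols(residualize(treatment, covariate),
                      residualize(outcome, covariate))[1]

# Simulated study (hypothetical numbers): the IV adds 5 points,
# and each year of experience (an extraneous variable) adds 3 points.
rng = random.Random(42)
n = 200
experience = [rng.uniform(0, 10) for _ in range(n)]
treatment = [1] * (n // 2) + [0] * (n // 2)
rng.shuffle(treatment)  # random assignment to conditions
outcome = [50 + 5 * t + 3 * e + rng.gauss(0, 2) for t, e in zip(treatment, experience)]

treated = [y for y, t in zip(outcome, treatment) if t == 1]
control = [y for y, t in zip(outcome, treatment) if t == 0]
print("raw difference:", round(sum(treated) / len(treated) - sum(control) / len(control), 2))
print("adjusted for experience:", round(adjusted_effect(treatment, outcome, experience), 2))
```

Because assignment here is random, both numbers should center on the true effect of 5; the adjusted estimate is typically much less noisy, since the variance due to experience no longer sits in the error term. In practice you would fit the same model with a statistics package (an ANCOVA or multiple regression).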

In a perfect experiment, there is only one thing different between the experimental group and the control group — namely, the presence of the experimental manipulation. In this perfect situation, any differences in the DV between these groups can be said to be caused by the experimental manipulation.

Extraneous Variables Are Bad, But Confounds Are Death

Here’s where things get really tricky: if an extraneous variable varies systematically with the IV, then it becomes a confounding variable. Imagine you tested the experimental group in the morning and the control group in the afternoon. Any differences in the DV could be due to your IV, or to time-of-day effects such as fatigue, alertness, or different workplace atmospheres!
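A small simulation, under purely hypothetical numbers, shows why this matters. Below, the IV’s true effect is 2 points and being tested in the morning adds 4 points. When the experimental group is always tested in the morning, the observed difference absorbs both effects; holding time of day constant, the first control strategy listed above, recovers the IV’s effect. The names `simulated_score` and `estimate` are illustrative only.

```python
import random

TRUE_EFFECT = 2.0    # hypothetical effect of the IV on the DV
MORNING_BOOST = 4.0  # hypothetical time-of-day effect (e.g., higher alertness)

def simulated_score(treated, morning, rng):
    """DV = baseline + IV effect + time-of-day effect + random noise."""
    return 50 + TRUE_EFFECT * treated + MORNING_BOOST * morning + rng.gauss(0, 3)

def estimate(design, n_per_group=500, seed=1):
    """Observed treated-minus-control difference under a given testing schedule."""
    rng = random.Random(seed)
    if design == "confounded":
        treated_morning, control_morning = 1, 0  # experimental group AM, control group PM
    elif design == "held_constant":
        treated_morning, control_morning = 1, 1  # everyone tested in the morning
    else:
        raise ValueError(f"unknown design: {design}")
    treated = [simulated_score(1, treated_morning, rng) for _ in range(n_per_group)]
    control = [simulated_score(0, control_morning, rng) for _ in range(n_per_group)]
    return sum(treated) / n_per_group - sum(control) / n_per_group

print("confounded schedule:", round(estimate("confounded"), 2))    # expected value 2 + 4 = 6
print("time held constant:", round(estimate("held_constant"), 2))  # expected value 2
```

Nothing in the confounded data tells you how much of the gap belongs to the IV and how much to the clock; only the design change separates them.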

If a confound is present, you lose your ability to make statements of causality! This is why experimental design requires such careful attention to controlling potential confounding variables.

The Essential Ingredient: Random Assignment

There is one thing that MUST be present in order to have a true experiment: random assignment. Each participant has an equal chance of being assigned to any experimental condition. This single feature is what separates true experiments from all other research designs. The requirement of randomization in experimental design was first formally established by R. A. Fisher in 1925, who argued that randomization eliminates bias and permits a valid test of significance (Fisher, 1935).
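As a rough illustration of what random assignment involves, the Python sketch below shuffles participants and deals them into conditions, using the daily check-in example from earlier. The function name, the `seed` argument (useful for documenting and reproducing an assignment), and the team IDs are hypothetical. The order of conditions is also shuffled, so that when the sample cannot be split evenly, no condition systematically receives the extra participant and every participant keeps the same chance of landing in each condition.

```python
import random

def randomly_assign(participants, conditions, seed=None):
    """Randomly assign each participant to exactly one condition.

    Participants are shuffled and dealt round-robin into conditions. The order
    of conditions is shuffled too, so no condition is systematically favored
    for an extra slot. Group sizes differ by at most one.
    """
    rng = random.Random(seed)
    people = list(participants)  # copy so the caller's sequence is untouched
    order = list(conditions)
    rng.shuffle(people)
    rng.shuffle(order)
    groups = {c: [] for c in conditions}
    for i, person in enumerate(people):
        groups[order[i % len(order)]].append(person)
    return groups

teams = [f"team_{i}" for i in range(1, 13)]  # hypothetical team IDs
assignment = randomly_assign(teams, ["daily check-in", "usual routine"], seed=2024)
for condition, members in assignment.items():
    print(condition, members)
```

Fixing the seed makes the assignment auditable; leaving it as `None` gives a fresh random assignment each run.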

Random assignment (hopefully!) balances out extraneous variables across groups. It doesn’t guarantee that groups will be perfectly equivalent, especially with small samples, but it does ensure that any differences are due to chance rather than systematic bias. With large enough samples, random assignment tends to produce groups that are closely equivalent, on average, on all variables, measured and unmeasured, other than your experimental manipulation. This principle forms the foundation of modern experimental design and statistical inference (Basu, 1980).
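The claim that random assignment balances groups better as samples grow can be checked with a quick simulation. This hypothetical sketch gives each person a standardized score on some trait nobody manipulated, randomly splits the sample in half, and records how far apart the two group means land, averaged over many repetitions. The function name `mean_imbalance` is illustrative.

```python
import random

def mean_imbalance(n, reps=300, seed=0):
    """Average absolute difference in a pre-existing trait between two
    randomly assigned groups of n // 2 people each."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(reps):
        # a trait nobody manipulated, e.g., a standardized personality score
        trait = [rng.gauss(0, 1) for _ in range(n)]
        rng.shuffle(trait)  # the random assignment step
        group_a, group_b = trait[: n // 2], trait[n // 2 :]
        diffs.append(abs(sum(group_a) / len(group_a) - sum(group_b) / len(group_b)))
    return sum(diffs) / reps

for n in (10, 100, 1000):
    print(n, round(mean_imbalance(n), 3))
```

The average imbalance should shrink roughly in proportion to 1/sqrt(n): noticeable with 10 participants, small with 1,000, but never exactly zero.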

Types of Experiments

Laboratory Experiments

Laboratory Experiments use random assignment and manipulation of IVs to maximize control. The contrived setting of a lab study allows researchers to eliminate many potential confounding variables. You might bring employees into a lab and test how different lighting conditions affect their performance on various tasks, or how different feedback styles influence motivation and effort.

However, there’s a major limitation: in the lab, people may show what they “can do” rather than what they “do do” in their natural environments. A closely related problem is the Hawthorne Effect: people behave differently when they know they’re being studied, which can make their laboratory behavior unrepresentative of how they’d act in real work situations.

Field Experiments

Field Experiments take advantage of realism to address external validity issues. They use random assignment and manipulation within real-world settings. For example, you might randomly assign half the workers in a factory to work under a new type of lighting (experimental group) while having the other half work under standard lighting (control group), then measure productivity (perhaps number of widgets per hour).

This approach maintains the key feature that enables causal inference (random assignment) while preserving the realistic context of actual work environments. Field experiments often provide the strongest evidence for practical applications because they demonstrate effects in the messy, complex settings where interventions will actually be implemented.
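For the factory lighting example, one way to analyze the results is a randomization (permutation) test, which follows directly from Fisher’s argument that randomization permits a valid test of significance: if the lighting had no effect, any re-shuffling of workers into groups would have been just as likely as the one that actually happened. The sketch below is illustrative only; the widgets-per-hour figures are made up, not data from any study, and `permutation_test` is a hypothetical helper rather than a library function.

```python
import random

def _mean(xs):
    return sum(xs) / len(xs)

def permutation_test(treated, control, n_perm=10_000, seed=0):
    """Two-sided randomization test for a difference in group means.

    Re-deals the pooled scores into groups of the original sizes many times
    and counts how often a shuffled difference is at least as extreme as the
    observed one.
    """
    rng = random.Random(seed)
    observed = _mean(treated) - _mean(control)
    pooled = list(treated) + list(control)
    n_t = len(treated)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = _mean(pooled[:n_t]) - _mean(pooled[n_t:])
        if abs(diff) >= abs(observed) - 1e-12:  # small tolerance for float rounding
            extreme += 1
    # add-one correction keeps the estimated p-value strictly above zero
    p_value = (extreme + 1) / (n_perm + 1)
    return observed, p_value

# Hypothetical widgets-per-hour figures for illustration only.
new_lighting = [52, 55, 49, 58, 54, 57, 51, 56]
standard_lighting = [48, 50, 47, 53, 49, 51, 46, 50]
diff, p = permutation_test(new_lighting, standard_lighting)
print(f"difference = {diff:.2f} widgets/hour, p = {p:.4f}")
```

With these invented numbers, only a small fraction of re-shuffles produce a gap as large as the observed 4.75 widgets per hour, so the p-value comes out well below .05. A Welch t-test would be a common parametric alternative.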

Quasi-Experiments

Quasi-Experiments are studies without random assignment. Random assignment isn’t always practical — you might need to use intact work groups, different departments, or naturally occurring categories. Quasi-experiments are very common in I/O psychology due to organizational constraints and practical limitations.

However, quasi-experiments cannot establish cause and effect because they lack random assignment. In truth, quasi-experiments are just correlational studies in disguise!

For example, consider testing whether height affects productivity. You could categorize workers as either tall or short, then compare the number of widgets per hour produced by each group. Even if you find differences, you can’t conclude that height causes productivity differences because so many other variables could explain the relationship — confidence, social biases, historical factors, or countless unmeasured characteristics that correlate with height.

Internal and External Validity: The Eternal Tradeoff

Internal validity refers to the extent to which we can draw causal inferences about variables. Are the results due to the IV, or could they be explained by confounding variables? Control, control, control is the key to internal validity, which is typically higher in lab studies where researchers can eliminate many potential confounds.

External validity refers to the extent to which results generalize to and across other people, settings, and times. Can we generalize from student samples to employees? From laboratory tasks to real job performance? From one organization to others? External validity is typically higher in field studies where behavior occurs in natural contexts.

There’s an important tradeoff between internal and external validity that researchers must consider (Campbell & Stanley, 1963). Internal validity is to replication as external validity is to generalization. If we have run a good, clean experiment in which we control variables well and have high internal validity, then we will probably be able to replicate the findings. If we have measured “real behavior” and have high external validity, then we can generalize the findings from this study to other settings and populations.

The challenge is that the more control you gain (increasing internal validity), the more artificial your setting becomes (potentially decreasing external validity). The more realistic your setting (increasing external validity), the less control you have over confounding variables (potentially decreasing internal validity) (Shadish et al., 2002).

Media Attributions

- Henry Crampton Experimenting © Unknown is licensed under a Public Domain license

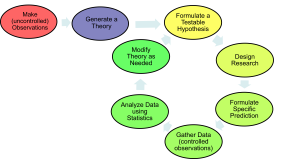

- The Scientific Method © Jay Brown

- Experimental Research Methods © Jay Brown and Copilot