5-2: Selection Decisions

This is where things get really scientific and interesting. Validation is essentially the process of proving that your selection methods actually work — that they can reliably predict who will succeed and who won’t. Without validation, you’re basically hiring based on gut feelings and crossed fingers, which is about as effective as it sounds.

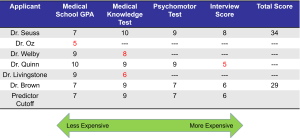

Most smart organizations don’t put all their eggs in one basket. Instead, they use selection batteries — combinations of different assessment methods that give them multiple angles on each candidate. It’s like assembling a jigsaw puzzle where each assessment provides a different piece of the picture. The trick is choosing the right pieces and figuring out how they fit together.

Think of it this way: you want to use tests that add something unique to your prediction while correlating minimally with each other. If all your tests measure basically the same thing, you’re not gaining much by using multiple assessments. Effective selection batteries predict success better than any single test alone.
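To see why low overlap between tests matters, here is a minimal sketch using the standard formula for the multiple correlation of a criterion with two predictors. The validities (0.40 and 0.30) and the intercorrelations are invented for illustration; they are not figures from any study cited here.

```python
import math

def multiple_r(r1y: float, r2y: float, r12: float) -> float:
    """Multiple correlation R between job performance and a two-test battery.

    r1y, r2y: validity of each test (its correlation with performance).
    r12: correlation between the two tests themselves.
    """
    r_squared = (r1y**2 + r2y**2 - 2 * r1y * r2y * r12) / (1 - r12**2)
    return math.sqrt(r_squared)

# Hypothetical values: same two validities, different amounts of overlap.
nearly_redundant = multiple_r(0.40, 0.30, 0.80)    # tests measure much the same thing
nearly_independent = multiple_r(0.40, 0.30, 0.10)  # tests measure different things

print(round(nearly_redundant, 3), round(nearly_independent, 3))
```

With these made-up numbers, the highly overlapping pair barely improves on the better single test (R of about 0.40), while the nearly independent pair reaches about 0.48.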

Validation Approaches

Predictive Validity

Predictive validity is considered the gold standard for proving your selection methods work, but it requires serious patience and commitment. Here’s how it works: you collect data from all your applicants using your new assessment methods, but then you set those results aside and make hiring decisions using your old methods. Then you wait, sometimes for months or even years, to see how the people you hired actually perform. Finally, you go back and check how strongly scores on the new assessments correlate with that later performance, which tells you whether the new methods would have predicted success better than your old ones.

This approach gives you rock-solid evidence that your selection methods actually work, but it’s like conducting a long-term scientific experiment every time you want to improve your hiring process. You need patience, resources, and the willingness to potentially make some suboptimal hiring decisions while you’re collecting data.
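The final analysis step usually boils down to a validity coefficient: the correlation between assessment scores collected at hiring and performance measured later. The sketch below computes a plain Pearson correlation on five invented employees. The data and variable names are hypothetical, and a real study would need a far larger sample.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return covariation / math.sqrt(ss_x * ss_y)

# Hypothetical data: scores on the new test at application time,
# and supervisor ratings gathered a year after hiring.
test_scores = [52, 61, 70, 75, 88]
performance = [2.9, 3.1, 3.0, 3.8, 4.2]

validity = pearson_r(test_scores, performance)
print(round(validity, 2))
```

The closer the coefficient is to 1.0, the better the test scores line up with later performance. With only five people, a value this high could easily be a fluke, which is part of why predictive studies take so long.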

Concurrent Validity

Concurrent validity is the more practical cousin of predictive validity. Instead of waiting around for future performance data, you give your new assessments to current employees and see how well the results match up with their existing performance ratings. It’s faster, cheaper, and doesn’t require you to potentially make bad hiring decisions in the name of science.

The downside? Current employees might be different from job applicants in important ways — they’ve already been through your selection process and survived, plus they’ve had time to learn and adapt to your organization. And their performance ratings might not perfectly reflect their true capabilities due to various biases and rating errors.

Validity Generalization

Here’s where the science gets really sophisticated: validity generalization uses meta-analysis, a family of statistical techniques for combining research findings from multiple studies across different organizations, to figure out whether selection methods work consistently across various contexts (Schmidt & Hunter, 1998). Instead of every single organization having to conduct its own expensive validation study, you can leverage decades of collective research wisdom.

This approach has revealed some pretty amazing patterns. For example, cognitive ability tests predict job performance consistently across virtually all jobs and organizations, while other selection methods might only work well in specific situations. It’s like discovering universal laws of human performance prediction.
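One basic building block of this kind of analysis is a sample-size-weighted average of the validity coefficients from individual studies, so that large studies count for more than small ones. The sketch below shows only that first step, with three invented studies. A full validity generalization analysis also corrects for statistical artifacts, which is beyond this illustration.

```python
def weighted_mean_validity(studies):
    """Average validity across studies, weighting each by its sample size.

    studies: list of (sample_size, validity_coefficient) pairs.
    """
    total_n = sum(n for n, _ in studies)
    return sum(n * r for n, r in studies) / total_n

# Hypothetical studies of the same test in three organizations.
studies = [(50, 0.20), (150, 0.30), (300, 0.35)]

mean_r = weighted_mean_validity(studies)
print(round(mean_r, 2))  # 0.32
```

Notice that the result sits closer to the largest study’s 0.35 than a simple average of the three coefficients would.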

Practical Approaches to Selection

Once you’ve proven your selection methods work, you need practical ways to actually use them for making hiring decisions. Your clients don’t just want to know that your selection battery works — they want to know which specific applicants will be successful and they want a cost-effective approach. Two main philosophies have emerged, each with different ideas about how to combine information from multiple assessments.

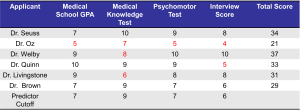

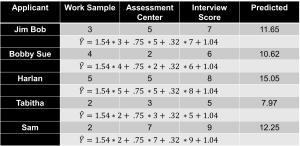

Multiple Cutoff Approach

The multiple cutoff approach is basically the “no excuses” method of selection. You establish minimum standards for every important factor you’re measuring, and candidates have to meet or exceed all of them to even be considered. It’s like college admissions requirements — you might need a minimum GPA AND minimum test scores AND specific coursework. Fall short on any requirement, and you’re out, regardless of how amazing you might be in other areas.

This approach makes perfect sense when every single capability you’re measuring is absolutely critical for success. Think about hiring a pilot — you’d want someone who meets minimum standards in technical knowledge AND flying skills AND decision-making under pressure AND visual acuity. You wouldn’t want to hire someone who’s brilliant technically but freezes up in emergencies, or someone who’s great under pressure but lacks the technical knowledge to handle complex situations.
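In code, the multiple cutoff rule is just an “all of the above” check. Here is a minimal sketch using the pilot example. The factor names, cutoff values, and candidate scores are all invented for illustration.

```python
def passes_all_cutoffs(scores: dict, cutoffs: dict) -> bool:
    """True only if the candidate meets or exceeds every minimum standard."""
    return all(scores[factor] >= minimum for factor, minimum in cutoffs.items())

# Hypothetical minimum standards for a pilot position.
cutoffs = {"technical_knowledge": 70, "flying_skills": 75, "decision_making": 65}

# Hypothetical candidates: A is brilliant technically but weak under pressure.
candidates = {
    "A": {"technical_knowledge": 95, "flying_skills": 90, "decision_making": 60},
    "B": {"technical_knowledge": 72, "flying_skills": 78, "decision_making": 70},
}

eligible = [name for name, scores in candidates.items()
            if passes_all_cutoffs(scores, cutoffs)]
print(eligible)  # ['B']
```

Candidate A has the higher scores on two of three factors, but one shortfall is enough to be screened out. High scores in one area cannot make up for a miss in another.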

The multiple hurdle method adds a strategic twist by giving assessments in a specific order. You use cheaper, easier assessments first to screen out obviously unqualified candidates before investing time and money in more expensive evaluations. It’s like having a series of gates that candidates have to pass through — why spend money on expensive assessments for people who won’t meet basic requirements?
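The cost logic of ordering the hurdles can be sketched as follows. The assessment names, per-candidate costs, cutoffs, and candidates are all hypothetical, chosen only to show how the bill shrinks when cheap screens come first.

```python
def run_hurdles(candidates, hurdles):
    """Apply assessments in order, paying only for candidates still in the running.

    hurdles: ordered list of (assessment_name, cost_per_candidate, cutoff).
    Returns the surviving candidates and the total assessment cost.
    """
    remaining = list(candidates)
    total_cost = 0
    for name, cost, cutoff in hurdles:
        total_cost += cost * len(remaining)  # everyone still in takes this one
        remaining = [c for c in remaining if c["scores"][name] >= cutoff]
    return remaining, total_cost

# Hypothetical hurdles, cheapest first.
hurdles = [("application_screen", 5, 1), ("ability_test", 20, 60), ("work_sample", 200, 4.0)]

candidates = [
    {"name": "Ana", "scores": {"application_screen": 1, "ability_test": 80, "work_sample": 4.5}},
    {"name": "Ben", "scores": {"application_screen": 0, "ability_test": 90, "work_sample": 4.8}},
    {"name": "Cy",  "scores": {"application_screen": 1, "ability_test": 50, "work_sample": 4.9}},
    {"name": "Dee", "scores": {"application_screen": 1, "ability_test": 70, "work_sample": 3.5}},
]

survivors, total_cost = run_hurdles(candidates, hurdles)
print([c["name"] for c in survivors], total_cost)  # ['Ana'] 480
```

Giving all three assessments to all four candidates would cost 900 in this made-up example. Running them as hurdles costs 480, because only two people ever reach the expensive work sample.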

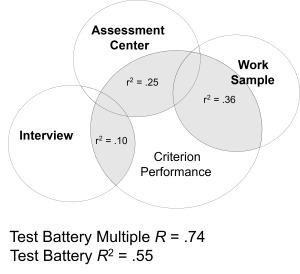

Multiple Regression

Multiple regression takes a more mathematical approach by creating sophisticated prediction equations that optimally weight different factors based on how well they predict success and how they relate to each other (Schmitt, Rogers, Chan, Sheppard, & Jennings, 1997). Instead of rigid pass/fail cutoffs, this method generates predicted performance scores for each candidate, allowing you to rank them from most likely to succeed to least likely.

This approach can squeeze every drop of predictive accuracy out of your assessment data by giving more weight to factors that strongly predict success and less weight to those that barely matter. It’s like having a super-smart algorithm that considers all available information and gives you the best possible forecast of each candidate’s potential.
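For the two-predictor case, the weighting can be worked out directly from three correlations. The sketch below uses the standard formulas for standardized regression weights and then ranks applicants by predicted performance. The correlations and applicant z-scores are invented, and a real application would estimate the weights from validation data with statistical software.

```python
def standardized_weights(r1y, r2y, r12):
    """Standardized regression weights for two predictors.

    r1y, r2y: each predictor's correlation with performance.
    r12: correlation between the two predictors.
    """
    denom = 1 - r12**2
    beta1 = (r1y - r2y * r12) / denom
    beta2 = (r2y - r1y * r12) / denom
    return beta1, beta2

def predicted_z(z1, z2, betas):
    """Predicted performance (in z-score units) from two predictor z-scores."""
    return betas[0] * z1 + betas[1] * z2

# Hypothetical correlations: test 1 is the stronger predictor.
betas = standardized_weights(0.50, 0.30, 0.20)

# Hypothetical applicants: (z-score on test 1, z-score on test 2).
applicants = {"Ana": (1.0, -0.5), "Ben": (0.2, 1.5), "Cy": (-0.3, 0.4)}

ranked = sorted(applicants,
                key=lambda name: predicted_z(*applicants[name], betas),
                reverse=True)
print(ranked)  # ['Ben', 'Ana', 'Cy']
```

Unlike the cutoff approach, this model is compensatory: Ben’s strong score on the second test more than makes up for an average score on the first, which is how he ends up ranked ahead of Ana.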

Many organizations actually use hybrid approaches that combine both philosophies — they establish minimum standards for absolutely critical requirements while using mathematical models to rank candidates who meet all the basic qualifications. For example, you might still want to set a minimum cutoff on each predictor even when using regression (like requiring a minimum work sample score of 4.00), or you might set a cutoff on the predicted criterion score that excludes applicants even if your client needs more employees.
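A hybrid rule like this one can be sketched as a filter followed by a ranking. The work sample minimum of 4.00 comes from the example above. The predicted-score cutoff of 3.0, the number of openings, and the applicants are hypothetical.

```python
def hybrid_select(applicants, min_work_sample, min_predicted, openings):
    """Screen on cutoffs first, then rank survivors by predicted performance."""
    qualified = [a for a in applicants
                 if a["work_sample"] >= min_work_sample
                 and a["predicted"] >= min_predicted]
    qualified.sort(key=lambda a: a["predicted"], reverse=True)
    return [a["name"] for a in qualified[:openings]]

# Hypothetical applicants with a work sample score and a regression-based
# predicted performance score.
applicants = [
    {"name": "Ana", "work_sample": 4.5, "predicted": 3.8},
    {"name": "Ben", "work_sample": 3.9, "predicted": 4.1},  # misses work sample cutoff
    {"name": "Cy",  "work_sample": 4.2, "predicted": 2.7},  # misses predicted-score cutoff
    {"name": "Dee", "work_sample": 4.0, "predicted": 3.2},
]

hired = hybrid_select(applicants, min_work_sample=4.00, min_predicted=3.0, openings=3)
print(hired)  # ['Ana', 'Dee']
```

The client wanted three hires but gets two. Ben has the highest predicted score of anyone, yet the work sample cutoff rules him out, and the predicted-score cutoff keeps the last opening empty rather than filling it with Cy.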

Just be careful of developing overly complicated or arbitrary decision rules. For instance, a rule like “if the intelligence difference between applicants is greater than 10 points, choose the more intelligent one; if it’s 10 points or less, choose the one with more experience” might sound logical but could be unnecessarily complex and hard to defend.

Selection Methods and Their Effectiveness

Decades of research have given us pretty solid evidence about which selection methods actually work for predicting job performance and which ones are basically just expensive guessing games. This research can help organizations choose the most effective approaches for their specific situations.

Cognitive Ability Testing

Cognitive ability tests consistently emerge as among the most powerful predictors of job performance across virtually all types of jobs. Meta-analytic research (which combines findings from multiple studies) shows these tests are particularly strong for predicting how well people will do in training programs, and they provide substantial economic benefits to organizations that use them appropriately (Schmidt & Hunter, 1998).

However, cognitive ability testing faces some serious challenges related to adverse impact. Research consistently shows group differences in average test performance, with some ethnic groups scoring lower and others scoring higher than the general population on average. These differences have led many organizations to abandon cognitive ability testing entirely, despite its high validity, particularly after legal decisions requiring strong business necessity justification for tests that produce adverse impact.
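The text above doesn’t say how adverse impact gets flagged in practice. One common rule of thumb in the United States, brought in here as an assumption rather than taken from this chapter, is the four-fifths (80%) rule: a group’s selection rate is compared with the rate of the most-selected group, and a ratio below 0.8 is treated as a warning sign. The group labels and counts below are invented.

```python
def impact_ratios(selection_rates: dict) -> dict:
    """Each group's selection rate divided by the highest group's rate."""
    highest = max(selection_rates.values())
    return {group: rate / highest for group, rate in selection_rates.items()}

# Hypothetical hiring outcomes: hired / applied for each group.
selection_rates = {
    "group_a": 48 / 80,  # 60% selected
    "group_b": 12 / 40,  # 30% selected
}

ratios = impact_ratios(selection_rates)
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(flagged)  # ['group_b']
```

A flag like this is a screening signal, not a legal conclusion. It is the kind of result that would prompt an organization to examine whether the test is justified by business necessity.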

Personality Testing

Personality testing has had a rocky history in personnel selection. For years, it was viewed skeptically due to low predictive validity and concerns about invading people’s privacy. But it’s experienced a comeback as organizations look for alternatives to cognitive ability testing that might reduce adverse impact problems.

Recent research suggests that combining personality measures with cognitive ability tests can provide incremental validity, meaning you get better predictions by using both together than by using either one alone (Bobko, Roth, & Potosky, 1999). However, studies have found a trade-off: batteries that combine personality tests with cognitive ability measures produce the highest predictive validity, but including cognitive ability alongside any other predictor may actually increase rather than reduce the potential for adverse impact.

Interview Validity

Research on interview effectiveness reveals some fascinating patterns based on how interviews are structured and what content they focus on. Structured interviews demonstrate significantly higher validity (0.44) than unstructured interviews (0.33) for predicting job performance (McDaniel, Whetzel, Schmidt, & Maurer, 1994). The research also examined different types of interview content: situational interviews (0.50 validity), job-related interviews (0.39 validity), and psychological interviews (0.29 validity).

Here’s something that might surprise you: individual interviews proved more valid than board interviews (0.43 vs. 0.32) for predicting job performance, regardless of how structured they were (McDaniel et al., 1994). These findings emphasize how important it is to standardize your interview process and focus on job-relevant content if you want to maximize your ability to predict who will succeed.

Contemporary Trends and Future Directions

Personnel selection keeps evolving in response to new technologies, changing workforce demographics, and emerging organizational needs. Current trends include increased focus on attracting and retaining talent in competitive job markets, development of innovative assessment technologies, and growing emphasis on organizational citizenship behaviors in selection decisions.

Research has shown that job candidates who demonstrate higher levels of helping behaviors, speaking up constructively, and loyalty to their organizations receive higher ratings, better salary offers, and stronger recommendations than candidates who show these behaviors to a lesser degree (Podsakoff, Whiting, Podsakoff, & Mishra, 2011). This suggests that selection processes should consider not just whether someone can do the technical aspects of a job, but also whether they’ll contribute to overall organizational effectiveness through positive citizenship behaviors.

The integration of technology in selection processes offers both exciting opportunities and new challenges. While technology can enhance efficiency and standardization, organizations must ensure that technological solutions maintain validity and comply with legal requirements. Additionally, increasing emphasis on diversity, equity, and inclusion requires careful attention to potential bias in selection processes and development of fair and inclusive practices.

Artificial Intelligence and Machine Learning

Emerging technologies like AI and machine learning are starting to transform how we think about personnel selection. These tools can analyze massive amounts of data to identify patterns that human decision-makers might miss, potentially improving prediction accuracy while reducing some types of bias. However, they also raise new questions about fairness, transparency, and the potential for algorithmic bias that we’re still learning how to address.

Video Interviewing and Remote Assessment

The rise of remote work has accelerated adoption of video interviewing and online assessment tools. While these technologies offer convenience and cost savings, organizations must ensure they maintain the validity and fairness of traditional in-person assessments. Plus, there are new considerations like ensuring all candidates have equal access to technology and dealing with potential technical difficulties during assessments.

Social Media and Digital Footprints

Organizations increasingly consider candidates’ social media presence and digital footprints as part of selection processes. This trend raises important questions about privacy, job relevance, and potential bias while offering new sources of information about candidate characteristics and cultural fit. You might be thinking, “Should I clean up my Instagram before applying for jobs?” — and honestly, that’s not a bad idea.

Media Attributions

- Selection © Unknown is licensed under a Public Domain license

- Multiple Cutoff Approach © Jay Brown

- Multiple Hurdle Approach for Selection © Jay Brown

- Using Multiple Predictors © Jay Brown

- Multiple Regression for Job Selection © Jay Brown