5-3: Usefulness of Selection Devices

Not all selection systems are worth the effort and expense. Some provide tremendous value by dramatically improving hiring outcomes, while others are costly exercises that don’t actually help you make better decisions. Understanding what makes selection systems useful helps organizations invest their resources where they’ll get the biggest bang for their buck. The answer to “Is it worth it?” often comes directly from an assessment of utility, and questions of utility are always framed in terms of “how much better is this new system than the one we used to use?”

Decision Accuracy

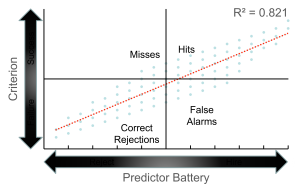

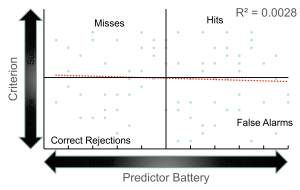

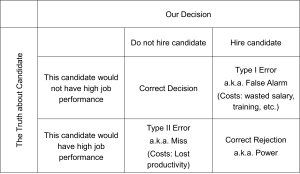

Every selection decision creates one of four possible outcomes, and understanding these outcomes helps you evaluate how well your system is working:

- Hits are your home runs: people you hired who turned out to be successful employees.

- False alarms are your expensive mistakes: people you hired who didn’t work out and had to be replaced.

- Correct rejections are the bullets you dodged: people you didn’t hire who would have been unsuccessful if you had.

- Misses are the ones that got away: people you didn’t hire who would have been great employees.

Obviously, you want to maximize hits and correct rejections while minimizing false alarms and misses. But here’s where it gets interesting: different organizations might prioritize these outcomes differently based on the costs and consequences of each type of error.

If you’re hiring air traffic controllers or nuclear power plant operators, false alarms (hiring someone who fails) could literally be catastrophic. These organizations typically use extremely strict selection criteria, accepting that they might miss some potentially good candidates to minimize the risk of hiring someone who could cause disasters.

For other positions, missing good candidates might be more problematic than occasionally hiring someone who doesn’t quite work out, leading to more relaxed selection standards that cast a wider net.

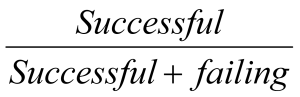

You can actually calculate specific decision accuracy metrics. Decision accuracy for hires is the percentage of hiring decisions that are correct: Hits/(Hits + False Alarms). This is particularly important for critical positions like surgeons, police officers, or pilots, where you absolutely need to get it right. Overall decision accuracy is the percentage of all decisions, hires and rejections alike, that are correct: (Hits + Correct Rejections)/(All Applicants). The challenge is that hits and false alarms are easy to measure (you know how your hires perform), but for the applicants you rejected you know only the combined number of misses and correct rejections, not how many fall into each group. As a result, overall decision accuracy usually has to be estimated rather than counted directly.
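
To make the two formulas concrete, here is a minimal Python sketch. The function names and the applicant counts are hypothetical, chosen only for illustration. In practice the split between misses and correct rejections would have to be estimated, since rejected applicants are never observed on the job.

```python
def hire_accuracy(hits, false_alarms):
    """Decision accuracy for hires: Hits / (Hits + False Alarms)."""
    hired = hits + false_alarms
    if hired == 0:
        raise ValueError("no one was hired")
    return hits / hired


def overall_accuracy(hits, correct_rejections, misses, false_alarms):
    """Overall decision accuracy: (Hits + Correct Rejections) / All Applicants."""
    applicants = hits + correct_rejections + misses + false_alarms
    if applicants == 0:
        raise ValueError("no applicants")
    return (hits + correct_rejections) / applicants


# Hypothetical counts for 100 applicants (illustrative assumption, not real data).
hits, false_alarms = 30, 10          # the 40 people who were hired
correct_rejections, misses = 50, 10  # the 60 people who were rejected

print(hire_accuracy(hits, false_alarms))                                  # 0.75
print(overall_accuracy(hits, correct_rejections, misses, false_alarms))  # 0.8
```

In this made-up example, three out of four hires worked out, and four out of five decisions overall were correct.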

Validity Considerations

The validity of your selection system directly impacts how useful it is. Higher validity means more accurate predictions, which translates to more hits and correct rejections and fewer costly mistakes. When your selection methods lack validity, you’re essentially making random hiring decisions — you might as well flip a coin and save yourself the time and expense. If there’s no correlation between your predictors and actual performance, your selection battery does nothing useful, and you might want to start looking for a new job!

Base Rate and Selection Ratio

Base rate — the percentage of your current employees who are performing successfully — provides important context for evaluating whether new selection systems are worth implementing. If 90% of your current employees are already successful, it’s much harder to demonstrate improvement from a fancy new selection system than if only 60% are meeting expectations.

Selection ratio — the number of job openings divided by the number of applicants — dramatically affects how much value you can get from sophisticated selection systems. When you have tons of applicants for just a few positions (low selection ratio), even small improvements in prediction accuracy can provide huge benefits because you can afford to be extremely picky. When you have few applicants for many openings (high selection ratio), elaborate selection systems provide less value because you don’t have much choice anyway. If your selection ratio is 1.00 (same number of openings as applicants), don’t bother using a selection battery — it has no value!
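
The interplay of validity, base rate, and selection ratio can be explored with a small simulation. The sketch below is only an illustration under stated assumptions: test scores and job performance are modeled as standard normal variables correlated at the chosen validity, the base rate is treated as the share of all applicants who would succeed if hired, and the organization hires strictly from the top of the score distribution. The function name and parameter values are hypothetical.

```python
import random
from statistics import NormalDist


def success_rate_among_hires(validity, base_rate, selection_ratio,
                             n_applicants=50_000, seed=0):
    """Simulate top-down hiring and return the share of hires who succeed.

    Assumes 0 < base_rate < 1, 0 < selection_ratio <= 1, and -1 <= validity <= 1.
    """
    rng = random.Random(seed)
    # Performance cutoff chosen so that `base_rate` of all applicants would succeed.
    cutoff = NormalDist().inv_cdf(1 - base_rate)
    noise_weight = (1 - validity ** 2) ** 0.5

    applicants = []
    for _ in range(n_applicants):
        score = rng.gauss(0, 1)
        # Performance correlates with the test score at roughly `validity`.
        performance = validity * score + noise_weight * rng.gauss(0, 1)
        applicants.append((score, performance))

    # Hire the highest scorers until the openings are filled.
    applicants.sort(key=lambda a: a[0], reverse=True)
    n_hired = max(1, round(selection_ratio * n_applicants))
    hired = applicants[:n_hired]
    return sum(perf > cutoff for _, perf in hired) / n_hired


for ratio in (0.1, 0.5, 1.0):
    rate = success_rate_among_hires(validity=0.5, base_rate=0.6,
                                    selection_ratio=ratio)
    print(f"selection ratio {ratio:.1f}: {rate:.2f} of hires succeed")
```

Under these assumptions, the success rate among hires should sit well above the base rate when the selection ratio is low and fall back to roughly the base rate when the selection ratio reaches 1.00 or when validity is set to zero.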

Think about the difference between Harvard’s admissions process (thousands of applicants for limited spots, making sophisticated selection extremely valuable) versus a fast-food restaurant during a labor shortage (limited applicants for many openings, making elaborate selection procedures less worthwhile). The selection ratio is often used as a measure of exclusivity for universities, and research shows that clinical PhD programs are among the most difficult to get into, though this doesn’t account for how many different schools each applicant applied to or the number of offers rejected.

Cost-Benefit Analysis

Selection systems range from simple and cheap (basic interviews and application reviews) to comprehensive and expensive (extensive testing, assessment centers, multiple interview rounds). The key is making sure the benefits of better hiring decisions outweigh the costs of more sophisticated selection procedures. As selection batteries get larger, they generally get better at prediction, though each added predictor tends to contribute less than the one before, and they also cost more money.

Organizations like NASA and the FBI invest heavily in selection because the costs of hiring the wrong person far exceed even the most expensive selection procedures. Why do they invest so much? Because the consequences of hiring someone who makes critical errors in space missions or national security work can be catastrophic. For other organizations, simpler approaches might provide the best return on investment.

You can use tools like Taylor-Russell tables to estimate the improvement in your workforce from a new selection battery (Taylor & Russell, 1939), but you still need to answer the crucial questions: What is that improvement worth in dollars? How much will increased productivity offset the costs? How much have you already spent developing and testing the new battery? Does this new system protect you better from lawsuits than your previous approach?
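
One rough way to put the dollar question into numbers is sketched below. This is a deliberately simplified back-of-the-envelope calculation, not an established utility formula: the function name, the dollar value assigned to each additional successful hire, and the battery cost are all hypothetical assumptions, and the success rates would come from your own estimates (for example, from Taylor-Russell tables).

```python
def net_benefit(n_hired, old_success_rate, new_success_rate,
                value_per_success, battery_cost):
    """Estimated dollar gain from a new battery, minus what the battery costs.

    All inputs are estimates supplied by the user; nothing here is measured.
    """
    # Additional successful employees expected under the new system.
    extra_successes = n_hired * (new_success_rate - old_success_rate)
    return extra_successes * value_per_success - battery_cost


# Hypothetical scenario: 50 hires, success rate rising from 60% to 75%,
# each additional successful hire valued at $20,000, battery costing $100,000.
gain = net_benefit(n_hired=50, old_success_rate=0.60, new_success_rate=0.75,
                   value_per_success=20_000, battery_cost=100_000)
print(f"Estimated net benefit: ${gain:,.0f}")
```

A positive result suggests the new battery pays for itself under these assumptions, while a negative result suggests it does not. The sketch leaves out factors the questions above raise, such as money already spent on development and any change in legal exposure.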

Contemporary research has shown that compensatory selection models generally outperform multiple-hurdle selection models in terms of overall utility, despite the higher costs associated with administering complete predictor batteries to all candidates (Ock & Oswald, 2018). However, organizations must balance this finding against practical considerations such as time constraints and resource limitations.

Our understanding of the validity and utility of selection methods continues to be refined through ongoing research. A comprehensive analysis of 100 years of research findings shows that certain selection methods consistently demonstrate superior predictive validity and utility across different organizational contexts (Schmidt, Oh, & Shaffer, 2016). Organizations should consider these research findings when designing their selection systems to maximize both effectiveness and cost-efficiency.

Media Attributions

- Useful © Unknown is licensed under a Public Domain license

- Decision Accuracy © Jay Brown

- Base Rate © Jay Brown

- Decision (in)Accuracy © Jay Brown

- Decision-Making © Jay Brown