Operant Conditioning I

Module 06 Reading

CHAPTER 6

OPERANT CONDITIONING I: BASIC PRINCIPLES

THE BASICS OF OPERANT CONDITIONING

Theorists

The Model

Four Consequences of Behavior

Positive Reinforcement

Punishment

Negative Reinforcement

Omission Training or Extinction Training

Check Your Learning: The Basics of Operant Conditioning

BEYOND THE BASIC MODEL

Discrimination and Generalization

Shaping

Reinforcement Schedules

Fixed-Ratio (FR) Schedule

Fixed-Interval (FI) Schedule

Variable-Ratio (VR) Schedule

Variable-Interval (VI) Schedule

Extinction

The Extinction Burst

Spontaneous Recovery

Check Your Learning: Beyond the Basics

Learning in the Real World: Superstitious Behavior

Key Terms and Definitions

Online Resources

References

Tables

Figures

The CEO of a small software company has noticed that his employees have developed the habit of arriving to work between 10 minutes and 30 minutes late. And, he’s noticed an increase in the number of sick days taken despite the fact that there are no seasonal reasons for an increase in absenteeism. The company is in the midst of the development of a new release of their software. The earlier they can get the new release to the market, the better chance they have of winning more of the market share from their competitors. Despite the fact that the employees know the importance of getting the release finished, they continue to arrive slightly late to work and to take plenty of sick days.

The CEO wonders what he can do to change these behaviors. Should he decrease the pay of employees who arrive late? Should he reward those who come early or on time? Should he fire an employee for arriving late? What should he do to encourage truly sick employees to stay home and those who are perhaps only marginally under the weather to come into work and get something accomplished? Would an incentive other than pay, perhaps greater flexibility with work hour scheduling, encourage employee attendance and punctuality?

The employees’ behaviors described above are all under their conscious control and are subject to the principles of operant conditioning. In a study of employee absenteeism, Markham, Scott, and McKee (2002) investigated whether a long-term employee recognition program or frequent feedback about absenteeism could improve employee attendance at work. Markham and colleagues found that employees who were part of the employee recognition program responded favorably in their behaviors and attitudes toward work. Employees who worked at a plant with monthly, quarterly, and annual recognition programs for work attendance showed decreased levels of absenteeism across four quarters. In contrast, employees who received reports about their absenteeism or who received no incentive for more frequent attendance did not show consistent decreases in work absenteeism.

What explains these behaviors? Why do some workers arrive late while others arrive early? Why do some take frequent sick days while others show up for work every day? You may think the answer is as easy as considering whether the individual enjoys his or her job. But researchers have found that even simple human behaviors, such as when you arrive at work, can be quite complex. Why did the recognition program change employee attendance behaviors when the feedback reports did not?

This chapter addresses the theory, the basic model, and some of the primary concepts related to operant conditioning. You will learn how the consequences of our behaviors determine how likely we are to repeat those behaviors in the future. As you read about the basics of operant conditioning, reflect back on employee absenteeism. Consider why it may be rewarding for some employees to miss work. Consider why some employees think it is unpleasant to miss work. Finally, consider how an employer would implement a program to change employee behavior.

THE BASICS OF OPERANT CONDITIONING

Have you ever curled your hands over the top of a dresser drawer and slammed the drawer shut? If you winced in pain after reading the previous sentence, then you have experienced the negative consequences of a behavior. Most likely you have not forgotten the experience and are now especially careful about the placement of your fingers when shutting drawers and even car doors. Learning as a result of the consequences of our behavior is known as operant conditioning.

Operant conditioning, also known as instrumental conditioning, is the use of consequences to change behaviors either in their manner or in the frequency with which they occur. The change in behavior is considered learning as a result of consequences that are experienced directly by the learner – such as the pain associated with slamming your fingers in a drawer. Unlike classical conditioning, which focuses on changing unconscious behaviors such as salivation or the dilation of the pupils, operant conditioning applies to conscious behaviors, such as driving under the speed limit because you have received too many speeding tickets.

Theorists

We begin by discussing two theorists whose work is fundamental to understanding a different kind of learning that focuses on those behaviors that are under our conscious control: Edward Thorndike and Burrhus F. Skinner.

The study of operant (instrumental) conditioning began at the turn of the 20th century with the work of Edward L. Thorndike (1898-1911). Thorndike began his study of learning by observing how cats escaped from a specially designed cage called a puzzle box (see Figure 6.1). Puzzle boxes were relatively simple devices that allowed an animal to open and escape the box, usually by moving a latch, to get food. Specifically, Thorndike was interested in testing whether cats figured out how to escape a puzzle box through insight or through gradual learning. Thorndike hypothesized that if cats (and other animals) could use insight, then the amount of time they took to solve the puzzle box problem and escape should decrease precipitously with learning. Thorndike’s research, however, showed that animals rely on trial and error, which produces gradual learning: the cats needed time to try various behaviors before determining which response was successful.

[[Insert Figure 6.1, Cat in a Puzzle Box, about here]]

Based on the results of his research on animal learning, Thorndike (1911) proposed three laws of learning:

The law of effect stated that the likelihood of a behavior being repeated depends upon whether the behavior results in a reinforcing consequence. Thus, a child who is rewarded for a certain behavior by being given a special treat is more likely to repeat the behavior that led to the treat. The law of effect has remained the most discussed among those proposed by Thorndike.

The law of recency stated that the most recent behavior is the most likely to be repeated. According to this law, we will travel the same, tried and true route to school or work that we traveled yesterday; our route will change only if we determine that it is ineffective due to road construction, a wreck, or the availability of a new, more desirable route.

Because Thorndike viewed learning as the formation of associations between a stimulus and a response, his law of exercise stated that, when all else is equal, these associations are strengthened through repetition. Applying the law of exercise to motor memory, you may find that you automatically dial a friend’s phone number when you pick up the phone because you dial that number so frequently. This strong association can be a nuisance when it is time to dial another person’s number.

Note that Thorndike focused on the association between behavior and desirable consequences. Thorndike referred to this type of learning as instrumental conditioning. Burrhus Frederick Skinner, who conducted similar research on learning as a result of consequences, used a different term, operant conditioning, to refer to learning from experience. Skinner noted that not all behavior results in satisfying consequences. Thus, when an animal experiences a negative consequence of a behavior, it is less likely to repeat that behavior in the future. Operant conditioning accounts for different types of consequences and their effects on behavior. Hereafter, we will use the term operant conditioning to refer to learning that occurs when an animal changes its behavior based on direct experience with the good or bad consequences of that behavior.

Like Thorndike, Skinner (1904-1990) noticed that the consequences of a behavior affect whether that behavior is repeated. He proposed his own school of thought, called “radical behaviorism,” in which behavior is characterized as a function of an animal’s history with the consequences of behavior. Specifically, Skinner proposed that behaviors are based on a three-part contingency in which (1) some set of conditions precedes and cues a behavior, then (2) the behavior is demonstrated, and (3) a consequence occurs as a result of the behavior. Thus, no behavior occurs in a vacuum. Rather, behaviors are influenced by the environment before and after they occur.

Skinner’s research examined the relationship between the three parts of the behavioral contingency by observing how changes to the environmental cues and consequences of a behavior influenced an animal’s rate of responding (Skinner, 1938). To study how different reinforcements and reinforcement schedules affected the rate of responding, Skinner constructed operant conditioning chambers, now known as Skinner boxes (see Figure 6.2), that allowed him to control the preceding event (cue) and the timing and frequency of the reinforcement or punishment of a targeted behavior. His work further refined psychologists’ understanding of how behaviors occur in context and how they are reinforced and extinguished. The work of Skinner and Thorndike led to a simple model of learning from experience.

[[Insert Figure 6.2, line art of a Skinner box, about here]]

The Model

The operant conditioning model is simple. It begins with a stimulus, known as the discriminative signal (SD), that sets the occasion for the desired response. The SD acts as a cue that a specific behavior should be demonstrated. For example, the “ding” of the elevator signals that the doors are about to open. The SD also signals a relationship between a behavior (R) and a reinforcer (SR), such as stepping into the elevator when the doors open and riding to the desired floor. Thus, the SD serves as the preceding event, the R as the desired behavioral event, and the SR as the reinforcer in the three-part contingency relationship. The model can be summarized as the following:

SD → R → SR

In animal studies of operant conditioning, the SD would have been a stimulus such as a flash of light that signaled the animal to press a lever. Pressing the lever then resulted in the delivery of food pellets to the animal, which was a reinforcer intended to encourage the animal to press the lever to receive food in the future. The trick, however, was for the animal to learn that pressing the lever would result in reinforcement only when the light was illuminated.
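The lever-pressing contingency described above can be written out as a small Python sketch. The names Trial, light_on, lever_pressed, and food_delivered are hypothetical labels chosen for illustration; they are not taken from Skinner’s apparatus or from any library. The sketch assumes the simplest possible rule: food follows a lever press only while the light is on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    """One moment in the chamber (hypothetical, illustrative structure)."""
    light_on: bool       # discriminative signal (SD) present?
    lever_pressed: bool  # response (R) emitted?


def food_delivered(trial: Trial) -> bool:
    """Reinforcer (SR) follows only when R occurs in the presence of the SD."""
    return trial.light_on and trial.lever_pressed


if __name__ == "__main__":
    # Walk through all four combinations of signal and response.
    for light in (True, False):
        for press in (True, False):
            trial = Trial(light_on=light, lever_pressed=press)
            outcome = "food" if food_delivered(trial) else "no food"
            print(f"light_on={light!s:5} lever_pressed={press!s:5} -> {outcome}")
```

Only one of the four combinations ends in food, which is why the animal must learn to attend to the light and not simply press the lever at any time.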

What is the real-world context for operant conditioning? A simple observation of driving behavior illustrates the concept nicely. When you are driving and you come to a red light, you put your foot on the brake and wait for the light to turn green. When the signal turns green, you slowly step on the gas and move through the intersection. What is the reinforcer in this example? We could answer that question in several ways. First, moving through the intersection and making progress toward your destination is a form of reinforcement. Additionally, stepping on the gas when the light turns green lets you avoid the onset of honking cars and screaming drivers (negative consequences). The latter consequences represent slightly different forms of reinforcement, which we will discuss in more detail in the following section.

Four Consequences of Behavior

In operant conditioning, learning occurs when an animal or human forms an association between the demonstration of a behavior and a particular consequence. Different consequences influence behavior in different ways. Research on operant conditioning has focused on four basic types of consequences: positive reinforcement, punishment, negative reinforcement, and omission training or extinction training. These consequences are intended to increase or decrease a target behavior.

Think Ahead

***Think of one example of positive reinforcement that you have experienced. Did the reinforcement increase your behavior? In your example, can you identify the signal, the behavior, and the consequence? Write down your answer before you read further.

Positive Reinforcement. In simple terms, positive reinforcement is giving the animal or learner something good with the intention of increasing the likelihood that the learner will demonstrate the targeted behavior again in the future. Parents practice positive reinforcement often. Let’s say that a parent wants to encourage his or her two-year-old to say “Thank you” when the child is given a gift. If a guest at the child’s birthday party presents the child with the gift, the child may or may not realize that the parent wants the child to politely say “Thank you.” So, the parent says, “What do you say when someone gives you a gift?” This provides the child with a cue (SD) to say “Thank you” (R). Once the child does so, both the guest who gave the gift and the parent smile at the child and the guest responds, “You are very welcome! How polite you are!” (SR). Over time and with repetition, hopefully the presentation of the gift will become the cue (SD) for the child’s “Thank you” response, and the child will continue to be reinforced each time by the gift-giver’s “You’re welcome!” response.

Think Ahead

*** Think of one example of punishment that you have experienced. Did the punishment decrease the behavior it was intended to influence? In your example, can you identify the signal, the behavior, and the consequence? Write down your answer before you read further.

Punishment. Whereas positive reinforcement seeks to increase the likelihood that a behavior will be repeated, punishment is delivered to decrease a behavior. Thus, an unpleasant consequence of a behavior occurs. One everyday example of this type of consequence is a traffic ticket. In theory, a driver who is speeding will receive a speeding ticket, which costs the driver money not only when paying the ticket but also when paying the car insurance bill. Those are aversive consequences by most standards and should discourage the driver from speeding. This widely used punishment is a very different scenario from what we would have if drivers were rewarded with money for driving at or under the speed limit.

Think Ahead

*** Think of a time when something unpleasant was taken away as a result of something you did. After that occurrence, did the removal of this aversive stimulus increase the behavior that made it go away? In your example, can you identify the signal, the behavior, and the consequence? Write down your answer before you read further.

Negative Reinforcement. Although punishment and positive reinforcement depend on the introduction of a consequence (good or bad) following a behavior, negative reinforcement focuses on removing a consequence. Specifically, negative reinforcement seeks to increase a targeted behavior by taking away an aversive consequence. One of your authors has a difficult time getting out of bed each morning. To encourage herself to get out of bed quickly, she sets her alarm to a loud, annoying buzzing noise and places the clock across the room from the bed. Thus, jumping out of bed and hitting the appropriate button is the fastest way to make the alarm stop shrieking in the morning. Taking away the aversive sound provides negative reinforcement that increases the behavior of jumping out of bed quickly and reliably.

Omission Training or Extinction Training. Omission training and extinction training are similar to negative reinforcement in that both seek to influence a behavior by taking something away. In omission and extinction training, something good is taken away to decrease a target behavior.

“Time-outs” are an example of a behavior modification technique based on omission training. Many parents of young children give their child a time-out to discourage the repetition of unwanted behavior. For example, suppose a two-year-old wants his father to give him the television remote control. The father, knowing the remote control is likely to be broken or disappear after passing into the toddler’s hands, refuses. The screaming begins. To discourage screaming, an unwanted behavior, as a response to this situation, the father places the child in time-out for two minutes. The child is required to sit quietly in a designated place in the home, preferably away from any reinforcing stimuli (e.g., toys within arm’s reach, a television nearby). The father explains the purpose of the time-out and the behavior that led to this consequence. The time-out removes the child from normal interactions with other individuals and his toys – two things that most children find reinforcing.

When one of your authors was a child, her teachers would often schedule a fun field trip to conclude the school year. The stipulation was that students had to stay out of trouble to go on the field trip. So, the possibility of a field trip was taken away from students who misbehaved in the classroom.

Think Ahead

***In the previous scenario, what is the target behavior and what is being taken away to decrease the frequency of the target behavior? Write down your answers before you read further.

The act of taking away something good (a field trip) to decrease a behavior (classroom misbehavior) is one example of omission training or extinction training. Can you think of other examples?

Consider the examples that you generated for the different types of consequences. Did you identify some examples as one type of consequence, say punishment, that were better examples of a different type of consequence, such as negative reinforcement?
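One way to check how you classified your examples is to ask two questions about each consequence: Was a stimulus added or taken away, and was that stimulus pleasant or unpleasant? The Python sketch below encodes those two questions. The function names classify_consequence and expected_effect are hypothetical, and the sketch simplifies each consequence to two yes-or-no answers, which real situations do not always allow.

```python
def classify_consequence(stimulus_is_pleasant: bool, stimulus_is_added: bool) -> str:
    """Name the type of consequence from two yes-or-no questions (illustrative only)."""
    if stimulus_is_added:
        # Something is introduced after the behavior.
        return "positive reinforcement" if stimulus_is_pleasant else "punishment"
    # Something is taken away after the behavior.
    if stimulus_is_pleasant:
        return "omission training or extinction training"
    return "negative reinforcement"


def expected_effect(consequence: str) -> str:
    """Both kinds of reinforcement aim to increase a behavior; the others aim to decrease it."""
    return "increase" if "reinforcement" in consequence else "decrease"


if __name__ == "__main__":
    # Examples drawn from this chapter: (description, pleasant?, added?)
    examples = [
        ("guest praises the child's 'Thank you'", True, True),
        ("driver receives a speeding ticket", False, True),
        ("alarm stops when the author gets out of bed", False, False),
        ("field trip is taken away for misbehavior", True, False),
    ]
    for description, pleasant, added in examples:
        kind = classify_consequence(pleasant, added)
        print(f"{description}: {kind} (intended to {expected_effect(kind)} the behavior)")
```

If one of your own examples lands in a different cell than you expected, revisit which stimulus you had in mind and whether it was added or removed.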

Check Your Learning: The Basics of Operant Conditioning

The model of operant conditioning is based on the premise that the consequences associated with our behaviors affect how we will behave in the future. Remember that behaviors occur in the context of some kind of cue. Then, the events that follow the behavior determine whether that behavior is more or less likely to be demonstrated in the future.

- Operant conditioning is learning that occurs when a behavior is changed based on the consequences of that behavior. Operant conditioning applies to behaviors that are under the conscious control of the animal or person. Thus, it is distinguished from classical conditioning, which relates to behaviors that are not under one’s conscious control.

- The law of effect states that behaviors followed by reinforcing consequences are more likely to be repeated in the future. When a behavior is followed by a desirable consequence or state of affairs, we are likely to demonstrate that behavior again in the future.

- The law of recency states that the most recently demonstrated behavior is most likely to be repeated in the future. That is, we tend to repeat the response to a stimulus that we most recently demonstrated.

- Radical behaviorism was proposed by Skinner and stated that a behavior is influenced by an organism’s experience with the consequences of that behavior. We form associations between stimuli, our responses, and the consequences of those responses. The consequences help determine whether we will repeat our response in the future.

- The three-part contingency is the general model of operant conditioning. In this model a discriminative signal (SD) cues an organism to respond with a specific behavior (R). The response is followed by a reinforcer (SR). The SD, R, and SR constitute the three parts of the behavioral contingency that produce operant conditioning.

- Positive reinforcement is the application of a pleasant stimulus or consequence that is intended to increase the likelihood that the animal or individual will repeat a targeted behavior in the future. In simple terms, positive reinforcement is the addition of something good or pleasurable with the goal of increasing a desired behavior.

- Punishment is the application of a negative stimulus or consequence that is intended to decrease future demonstrations of a targeted behavior. Again, in simple terms, punishment is the addition of something bad or unpleasant with the goal of decreasing an undesired behavior.

- Negative reinforcement is the removal of an unpleasant stimulus to encourage future demonstrations of a targeted behavior. Thus, negative reinforcement takes away something unpleasant, which results in a more favorable state of affairs. When the unpleasant stimulus goes away, the desired behavior should increase.

- Omission training or extinction training is the removal of a pleasant stimulus to discourage future demonstrations of a targeted behavior. In this case, taking away something pleasant is intended to decrease an unwanted behavior.

BEYOND THE BASIC MODEL

After establishing the basic effects of operant conditioning, researchers began to examine how different discriminative signals, consequences, and the timing of consequences influence behavior. Specifically, researchers focused on stimulus discrimination, behavioral shaping, reinforcement schedules, and the extinction burst that can occur when reinforcement for a behavior is withdrawn.

Discrimination and Generalization

After Thorndike and Skinner presented their findings, researchers began breaking down the elements of the three-part contingency to gain a better understanding of how learning due to operant conditioning proceeds. They manipulated the first element of the three-part contingency, the discriminative signal (SD), to determine whether animals could learn to respond in the same way to a range of signals or to respond in different ways based on slight variations in the signal.

Discrimination. Guttman and Kalish (1956) conducted a well-known study of how animals respond to different discriminative signals (SD). In their study, pigeons were trained to peck at a yellow light source to receive food reinforcement. Guttman and Kalish were interested in whether the pigeons would continue to peck when tested with light signals that were yellow, green, orange, yellow-green, and yellow-orange (see Figure 6.3). Thus, the researchers wanted to know whether the animals could distinguish between the different colors of light as demonstrated by their responses to the lights. In a series of test trials using the alternate light colors, the researchers measured how many times the pigeons pecked at each color as an indication of how similarly the pigeons perceived the light source to the yellow light used during training.

[[Insert Figure 6.3, drawing of pigeon(s) with colored lights, about here]]

Guttman and Kalish’s (1956) results showed that the pigeons pecked more at lights that were of similar wavelength (measured in nanometers) to the yellow light used during training. Thus, green and orange lights resulted in less pecking than yellow-green and yellow-orange lights. The pigeons demonstrated the most responding when presented with a yellow light of the same wavelength as was used during training. The pattern of responding formed a curve in which the frequency of responding varied by the light wavelength. This curve was referred to as a stimulus-generalization gradient (see Figure 6.4). In summary, the pigeons demonstrated the ability to discriminate between different gradients of a stimulus through an increase or decrease in the frequency of their pecking. The ability to discriminate between the signals changed the way the pigeons responded.

[[Insert Figure 6.4, stimulus-generalization gradient curve, about here]]
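The general shape of a stimulus-generalization gradient can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reproduction of Guttman and Kalish’s data: the bell-shaped (Gaussian) curve, the 580 nm training wavelength, the peak of 100 responses, the 15 nm width, and the wavelength assigned to each color name are all hypothetical values chosen only to show responding falling off as a test light moves away from the trained light.

```python
import math


def response_rate(test_nm: float,
                  trained_nm: float = 580.0,   # assumed wavelength of the yellow training light
                  peak: float = 100.0,         # assumed responding at the trained wavelength
                  width_nm: float = 15.0) -> float:  # assumed spread of the gradient
    """Hypothetical gradient: responding declines with distance from the trained wavelength."""
    distance = test_nm - trained_nm
    return peak * math.exp(-(distance ** 2) / (2 * width_nm ** 2))


if __name__ == "__main__":
    # Rough, illustrative wavelengths for the test colors named in the text.
    test_lights = {
        "green": 540,
        "yellow-green": 560,
        "yellow": 580,
        "yellow-orange": 600,
        "orange": 620,
    }
    for name, nm in test_lights.items():
        print(f"{name:>13} ({nm} nm): {response_rate(nm):6.1f}")
```

Under these assumptions the curve peaks at the yellow training light, is lower for yellow-green and yellow-orange, and is lowest for green and orange, which matches the ordering described in the text.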

Where do we see stimulus discrimination in our daily lives? New parents often find that they learn to distinguish between different types of cries from their newborn infants. Parents (and other close caregivers) of infants report being able to distinguish between cries of hunger, pain, fatigue, discomfort, and frustration. This knowledge is acquired through operant conditioning. The first few days with a newborn include many instances of trial and error to make a baby stop crying. As a new mother, one of your authors was very frustrated when trying to soothe her three-day-old crying infant. After the mother adjusted a loose sock on the infant’s foot, the baby stopped crying and all was well. That was the first instance of reinforcement for the young parent, and she quickly learned that discomfort in her infant was associated with a high-pitched scream. Future experiences with high-pitched crying led to the immediate review of her infant’s socks. Of course, the young parent also learned quickly that a loose sock was not the only type of discomfort that leads to crying. Tags in garments can be irritating to infants, too.

Generalization. Having established that animals can learn to distinguish between different gradients of a stimulus, early researchers examined the converse of this idea, stimulus generalization. Specifically, researchers were interested in whether animals could learn to generalize a response to one type of signal to other, similar signals.

Based on early research, generalization seems to occur when two stimuli are paired together during learning. After exposure to such a pairing, the person or animal exhibits a tendency to respond to each of the individual stimuli as one would respond to the compound stimulus. For example, an animal may be trained to press a lever to receive food reinforcement after perceiving the combination of a tone and light. Later, the animal may demonstrate the lever pressing response when presented only with the tone or only with the light. The combination of the two signals creates an expectation of pending reinforcement. Researchers have referred to this type of learning transfer as meaning-based generalization because the two stimuli are assumed to have the same meaning in terms of their ability to predict reinforcement. Two stimuli that are physically similar, such as lights of similar wavelength, may also demonstrate stimulus generalization. But, this type of generalization is referred to as similarity-based generalization.

Think Ahead

Can you think of an example where we see stimulus generalization in our daily lives? Is your example meaning-based or similarity-based? Write down your answer before you read further.

A practical demonstration of similarity-based stimulus generalization is the application of social stereotypes. That is, we tend to form categories of individuals based on age, gender, and ethnic group membership. For each category of membership, we often associate a few key traits with members of the category. Then, we assume that all members of a category conform to these traits. That is, we generalize the traits to every member of the category. Social stereotyping can be helpful: For example, knowing that family tends to be highly valued in Hispanic cultures may help us understand why a Hispanic coworker takes time off to drive her sister to a medical appointment. Unfortunately, however, our generalizations are often misapplied; we may make assumptions about members of a category without considering the individual differences of those members. As a result, our expectations may be incorrect. For this reason, social stereotyping is often viewed as negative and hurtful to members of the category.

Similarly, individuals who have experienced a traumatic event may find that they generalize feelings of fear and avoidance behaviors to situations and stimuli that are like those that were present during the traumatic event. Let’s say that a cyclist is enjoying his regular early morning ride. Suddenly, the cyclist hears a single bark and the sound of paws running across grass. Within seconds, the cyclist sees a snarling mid-sized dog that immediately lunges for the bike and cyclist and begins to attack. The cyclist is injured in the attack, but eventually is able to fight off the dog. After the frightening attack, the cyclist finds it uncomfortable to ride past the site of the attack. As days and weeks pass, he finds it difficult to ride or drive on the same road near the attack. Eventually, he begins to avoid going near the site of the attack or even driving partially down the street so as to eliminate any uneasy feelings he might have. A few weeks later, he visits the home of a friend and finds that he is uncomfortable around the friend’s small dog. The dog is not the same breed as the one that attacked him, but is a dog, nevertheless. He asks the friend to put the dog in the backyard and vows silently to himself not to visit his friend in his home again.

In this example, the cyclist has generalized the negative feelings associated with the specific dog and location related to the attack to a broader location and other dogs. As such, the cyclist modifies his behavior to remove the unpleasant feelings associated with the attack. Thus, this is an example of stimulus generalization. Although the dog attack scenario may seem far-fetched to those who enjoy the company of friendly dogs, the point is that trauma victims often generalize unpleasant feelings to similar situations.

Shaping

In some cases, it is desirable for animals or people to develop behaviors that they may not display under normal circumstances. Take potty training as an example. In infancy and the early toddler years, children wear diapers because they cannot anticipate the need to expel body wastes. As they grow and their bodies mature, however, children gain the ability to sense the need to use the toilet. In time, they can control these urges and learn to use the toilet as older children and adults do. As all parents and caregivers will attest, using the toilet is a behavior that is not in an infant’s or a toddler’s usual set of behaviors. With some guidance from caregivers and possibly an older sibling, the toddler gradually learns a new and unfamiliar behavior. How does this type of learning happen? The operant conditioning technique of shaping is a common method for getting an animal or human to demonstrate a new behavior that is not within the animal or human’s usual repertoire of behaviors.

How does shaping help an animal or person acquire an entirely new behavior? Shaping is a behavioral intervention in which a person or animal is reinforced for the demonstration of behaviors that successively become more similar to a goal behavior. In the case of potty training, a child may initially be rewarded simply for noticing and announcing to a parent or caregiver that he or she senses the need to use the toilet. However, as shaping continues, the child may be rewarded not simply for noticing the sensation, but for acting on it in increasingly complex ways. The goal is to shape the child’s behavior so that he or she gradually learns a new behavior that is very different from the currently demonstrated behavior.

Let’s consider a more detailed example of how shaping proceeds. Suppose you want to train your dog to ring a bell hanging from the back door to indicate when he needs to go outside. Your dog has not been in the habit of ringing the bell by hitting it with his nose. So, training this behavior will require some effort – and some small, intermediate steps. You may want to begin by leading the dog to the back door and putting his nose to the bell when you sense it is time for him to go outside (e.g., you see the tell-tale signs of sniffing and pacing). That’s one approach. And, it may work. But, hitting the bell with its nose is not something the dog would do normally. Thus, you are asking the dog to learn a completely new behavior.

A good behaviorist would recognize the goal behavior (hitting a bell with the nose to signal needing to go outside) as one that is very different from the dog’s natural behavior. Thus, it is better to begin the training simply by walking the dog to the back door when you sense it is time for him to go outside. Each time he goes to the back door, you reinforce him with a treat and then let him outside. You repeat this step until the dog demonstrates going to the back door reliably. At this point, you change the contingency so that the dog no longer is reinforced simply for going to the back door. Now, you want to introduce the bell. You hang the bell on the doorknob of the back door. To draw the dog’s attention to it, you decide to smear some moist cat food, which dogs seem to love, on the bell. The dog will want to sniff and lick where the cat food has been placed on the bell. When the dog goes to the back door and licks the bell, you say, “Bell.” When the dog licks the bell and it rings, you reinforce him and let him outside. You are now reinforcing the dog for a behavior that is closer to the goal behavior. You repeat this sequence until the dog demonstrates licking the cat food off the bell consistently to indicate it is time to go outside.

Then, you change the stakes for the dog yet again. It is time to reinforce the dog for a new behavior. Now when the dog goes to the bell to lick it, you no longer put cat food on it. Instead, you say, “Bell,” and wait for the dog to lick or nudge the bell before you reinforce him and let him outside. As each behavior is mastered, you successively reinforce new behaviors that gradually get closer in form to the desired behavior (the dog nudges or licks the bell to ring it and signal that it is ready to go outside). Each step in this process requires the owner to reward successive approximations to the goal behavior. Learning is achieved when the dog reliably demonstrates the goal behavior.

Ricciardi, Luiselli, and Camare (2006) used shaping to change the behavior of an 8-year-old boy diagnosed with autism who displayed fear of electronic animated figures. When the boy was about a year and a half old, he began demonstrating intense fear of electronic animated figures such as a dancing Elmo® doll, blinking holiday decorations, and life-size Santa Claus replicas (Ricciardi, Luiselli, & Camare, 2006). The boy displayed fear responses that ranged from screaming, to hitting, and ultimately fleeing the location in which the animated figure was encountered. His fear of these objects was interfering with his life to the point where he was unable to go into stores or visit community locations where such objects might be found. Thus, he, with the help of his parents, avoided the unpleasant stimuli completely.

Ricciardi, Luiselli, and Camare began a behavioral intervention with the boy that focused on shaping his approach behaviors when faced with animatronic figures. The researchers presented the boy with three animatronic toys (a dancing Elmo® doll, a dancing Santa Claus figure, and a jumping Tigger® toy) along with three objects the boy preferred (tools, catalogs, magazines). In a series of 15 sessions, the boy’s approach behaviors toward the animatronic toys were shaped by reinforcing him with access to the preferred objects if he would stand near the feared objects for a specified amount of time. The researchers gradually shaped the distance between the boy and the objects. If the boy was able to stand at a specified distance for a pre-determined amount of time on 90% of the attempts, then the researchers would change the criterion to a closer (shorter) distance. Eventually, the boy was required to touch the figures. Throughout the intervention sessions, the boy was allowed uninterrupted access to the preferred objects, which were placed near the feared animatronic toys.
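The criterion rule described above (move closer only after success on 90% of attempts) can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' actual procedure: the function name `next_distance`, the distances in meters, and the step size are hypothetical, since the study's exact values are not given here.

```python
def next_distance(distance_m, successes, attempts, step_m=1.0, criterion_pct=90):
    """Return the distance to require on the next block of attempts.

    The distance shrinks by step_m only when the success rate meets the
    criterion; otherwise the current distance is kept. All numeric values
    are hypothetical and chosen only for illustration.
    """
    if attempts == 0:
        return distance_m  # nothing observed yet, so keep the criterion
    # Integer comparison avoids floating-point surprises at exactly 90%.
    if successes * 100 >= criterion_pct * attempts:
        return max(0.0, distance_m - step_m)  # 0.0 stands for touching the figure
    return distance_m


# 9 of 10 successful attempts meets the 90% criterion, so the distance shrinks.
print(next_distance(6.0, successes=9, attempts=10))
# 8 of 10 does not, so the same distance is kept for the next block.
print(next_distance(6.0, successes=8, attempts=10))
```

The key design point is that the requirement changes only after the current step is mastered, which is what distinguishes shaping from simply demanding the goal behavior at the outset.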

Three months after treatment, the researchers found that the boy was able to go to stores and events that included animatronic figures (Ricciardi et al., 2006). Although he sometimes protested visiting these places or events, his parents did not report any additional escape behaviors. Thus, it appears that the researchers successfully shaped his approach behaviors and extinguished his avoidance of animatronic figures.

While it may seem that shaping can take a long time, there are some occasions when a person or an animal may learn a complex series of behaviors through shaping in a short period of time. Shaping is used frequently in behavior modification programs to condition animals and individuals to learn new goal behaviors that may be very different from the current behaviors the animal or individual naturally demonstrates.

Shaping is a technique that therapists can use to change a behavior by changing the response that results in reinforcement. Researchers have also investigated how behavior is affected when the timing and frequency of reinforcement are manipulated. They have identified several reinforcement schedules that produce different patterns of responding.

Reinforcement Schedules

In many early studies of operant conditioning, the animal was given reinforcement every time it demonstrated the desired behavior. This form of reinforcement was referred to as continuous reinforcement. In continuous reinforcement, the demonstration of a target behavior reliably predicted the presentation of a reinforcer. This form of reinforcement typically resulted in continuous, high rates of responding by an animal. However, early researchers were curious whether changing when the reinforcement was delivered would change how animals would respond. The four basic reinforcement schedules are described below.

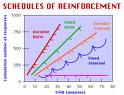

Fixed-Ratio (FR) Schedule. The simplest of the schedules is the fixed-ratio (FR) schedule. When using this schedule, a researcher would present a reinforcer after a fixed number of the desired responses have been demonstrated by the animal or person. For example, a researcher may reinforce a rat after every 10th response. This would be referred to as a FR 10 schedule. A FR reinforcement schedule typically leads to a high rate of responding up to the point of reinforcement. Then, there is a pause in which no responding occurs. This is called a postreinforcement pause. The pause is usually brief and is followed by another period of rapid responding until the animal or person receives the next reinforcer.
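The rule behind a fixed-ratio schedule is simple enough to write out directly. The short Python sketch below, using a hypothetical function name `fixed_ratio`, lists which responses would earn a reinforcer; it models only the delivery rule, not the animal's response rate or the postreinforcement pause.

```python
def fixed_ratio(n_responses, ratio=10):
    """Return the 1-based response numbers that earn a reinforcer.

    On an FR schedule every `ratio`-th response is reinforced.
    """
    return [i for i in range(1, n_responses + 1) if i % ratio == 0]


# On FR 10, 35 responses earn reinforcers at responses 10, 20, and 30.
print(fixed_ratio(35, ratio=10))  # [10, 20, 30]
```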

As an example, suppose it is autumn, you have a lot of trees in your yard, and your parents want you to rake up the leaves before it snows. They offer to pay you $5 for each full bag of leaves as soon as you bring it to the garage. If your behavior is typical of people on a FR schedule, you will work quickly to fill a bag, collect your $5, then take a short break before starting to fill the next bag. When you are close to having a bag full, you will work even more quickly in anticipation of the next $5 payment.

The most typical real-life application of the FR schedule is piecework. In piecework, employees are paid by the number of products produced. They demonstrate similar patterns of responding as do animals on a FR schedule. That is, they respond rapidly to generate the number of pieces to receive payment. Then, there is a brief pause before they begin again. The length of the pause is related to the number of behaviors that must be demonstrated before reinforcement. Longer FR schedules are associated with longer postreinforcement pauses. Figure 6.5 shows a hypothetical response curve that one might observe for a FR schedule.

[[Insert Figure 6.5, Responding Patterns for Different Reinforcement Schedules, about here.]]

Fixed-Interval (FI) Schedule. When using a fixed-interval (FI) schedule, the researcher presents the reinforcement after a fixed amount of time, provided that at least one target behavior has been demonstrated. A typical FI schedule may consist of a one-minute interval. The reinforcement will be administered after one minute if the target behavior has been demonstrated during the interval. If the behavior has not been demonstrated, then the reinforcement will become available, but it will not be delivered until the target behavior occurs. After reinforcement, the time interval restarts. This form of reinforcement results in responding that is relatively low immediately after reinforcement. But, as the time interval passes, responding increases in a burst. Figure 6.5 demonstrates this response pattern.
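One way to make the fixed-interval rule concrete is to model it as a timer: once the interval has elapsed, the reinforcer becomes available and is delivered at the next response, after which the timer restarts. The Python sketch below follows that reading of the description above; the function name `fixed_interval` and the response times (in seconds) are hypothetical.

```python
def fixed_interval(response_times, interval=60.0):
    """Return the times of the responses that are reinforced.

    A response is reinforced only if at least `interval` seconds have
    passed since the last reinforcer (or since the session began).
    """
    reinforced = []
    available_at = interval  # the first reinforcer becomes available here
    for t in sorted(response_times):
        if t >= available_at:
            reinforced.append(t)
            available_at = t + interval  # the interval restarts after delivery
    return reinforced


# Responses at 10 s and 30 s come too early; the response at 61 s is reinforced.
print(fixed_interval([10, 30, 61, 70, 125, 200], interval=60))  # [61, 125, 200]
```

Notice that the responses at 10, 30, and 70 seconds earn nothing. Responding early in the interval is wasted effort, which is consistent with the low rate of responding seen right after reinforcement.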

[[insert Figure 6.5, fixed-interval response curve, about here]]

Where would you find an example of a FI reinforcement schedule in the real world? Many students demonstrate this type of responding when they study. That is, students often will study very little between exams. But, the closer the date for an upcoming exam, the more studying the student will do. Thus, the rate of responding increases as the time interval comes to a close.

Variable-Ratio (VR) Schedule. A variable-ratio, or VR, schedule is similar to the FR schedule in that reinforcement is tied to the demonstration of a behavior and not necessarily to the passage of time. However, unlike the FR schedule, the delivery of reinforcement occurs after the demonstration of an average number of responses. In a VR 10 schedule, for example, reinforcement occurs on average every 10 times the behavior is demonstrated.
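A variable-ratio schedule can be sketched by drawing a new response requirement at random after each reinforcer. In the Python sketch below, the requirement is drawn uniformly between 1 and 19 so that it averages 10; that particular distribution is an assumption made for illustration, as is the function name `variable_ratio`. Real VR schedules may draw their requirements in other ways.

```python
import random


def variable_ratio(n_responses, mean_ratio=10, seed=0):
    """Return the 1-based response numbers that earn a reinforcer.

    After each reinforcer, a new requirement is drawn uniformly from
    1 to 2 * mean_ratio - 1, which averages out to mean_ratio.
    """
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    reinforced = []
    required = rng.randint(1, 2 * mean_ratio - 1)
    count = 0
    for i in range(1, n_responses + 1):
        count += 1
        if count == required:
            reinforced.append(i)
            count = 0
            required = rng.randint(1, 2 * mean_ratio - 1)
    return reinforced


hits = variable_ratio(10_000, mean_ratio=10)
# Over many responses, roughly one response in ten is reinforced,
# but the gap between any two reinforcers is unpredictable.
print(len(hits), hits[:5])
```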

Where would you find an example of this reinforcement schedule in the real world? The typical example provided for VR schedules is that of a slot machine. Slot machines in casinos pay out on a variable-ratio schedule such that, on average, one of every 100 coins fed into the machine will result in a jackpot of some size. However, if you have ever visited a casino, you have noticed that you may sit at a machine for hours and lose all of your money. Meanwhile, the person next to you inserts coins just three times and earns back the money he or she spent and then some. Why would casino owners use a VR schedule to reinforce gambling behavior? The VR schedule, because it is unpredictable, results in a high and consistent frequency of responses. Therefore, the casinos make money from the gamblers. Figure 6.5 shows a graph of the typical response rate associated with a VR schedule.

Variable-Interval (VI) Schedule. Finally, the variable-interval (VI) schedule results in reinforcement after an average amount of time has passed, provided that the targeted behavior has been demonstrated. That is, reinforcement may occur an average of every five minutes. However, reinforcement may occur after two minutes during one interval, again seven minutes later, one more minute later, and then 12 minutes later. Because it is impossible for the subject animal or person to predict when the next time reinforcement will occur, the VI schedule, like the VR schedule, is associated with steady responding (see Figure 6.5).
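The variable-interval rule works like the fixed-interval timer, except that the wait changes after every reinforcer. The Python sketch below uses the waits from the example above (2, 7, 1, and 12 minutes) and assumes, purely for illustration, a subject who responds once per minute; the function name `variable_interval` is hypothetical.

```python
def variable_interval(response_times, intervals):
    """Return the times of the responses that are reinforced.

    `intervals` lists the successive waits. Each wait is measured from
    the previous reinforcer (or from the start of the session).
    """
    reinforced = []
    waits = iter(intervals)
    wait = next(waits, None)
    last = 0.0
    for t in sorted(response_times):
        if wait is None:
            break  # no more scheduled reinforcers
        if t >= last + wait:
            reinforced.append(t)
            last = t
            wait = next(waits, None)
    return reinforced


waits = [2, 7, 1, 12]        # minutes, taken from the example above
steady = range(1, 31)        # assumed: one response every minute for 30 minutes
print(variable_interval(steady, waits))  # [2, 9, 10, 22]
print(sum(waits) / len(waits))           # 5.5
```

These four waits average 5.5 minutes rather than exactly five. A schedule's stated average describes the long run, so any short stretch of intervals may come out somewhat above or below it.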

Where would you find an example of the VI schedule in the real world? Suppose you work at a satellite office for a company. The company may require a regional supervisor to visit your office 26 times per year. Thus, you may guess that the supervisor will visit roughly every two weeks. However, your supervisor may be sneaky and prefer to “pop in” at the satellite office unannounced. In fact, the supervisor has been known to pop in two days in a row and to wait a month or more between visits. Because of this, you and your colleagues are never sure when the supervisor will appear. For the sake of job security, you and your colleagues stay consistently busy doing the company’s work.

The reinforcement schedules discussed here demonstrate that intermittent reinforcement, in which a behavior is not necessarily reinforced each time it is demonstrated, can be used to condition a behavior and maintain it over time. In fact, intermittent reinforcement, in comparison to continuous reinforcement after every behavior, produces behavior that is more resistant to extinction, a concept addressed in the following section.

Extinguishing Learned Behaviors

Getting a behavior to occur is only part of learning; researchers interested in operant conditioning are also interested in how a behavior can be extinguished, or cease to occur. In operant conditioning, a behavior can be extinguished by eliminating reinforcement after the behavior is demonstrated. Over time, the absence of reinforcement should result in the discontinuation of the behavior.

But not all behaviors extinguish equally easily; the ease of extinction varies according to the reinforcement schedule that was used when the behavior was learned. Behaviors that are learned on a variable-ratio or variable-interval schedule show particular resistance to extinction. For an animal or person to stop demonstrating a behavior, they must learn that the behavior does not lead to reinforcement. Because the variable schedules (variable-ratio or variable-interval) reinforce infrequently and irregularly, it takes an animal or human many trials to determine that the particular behavior no longer leads to reinforcement. Thus, gambling behavior, which is characterized by a variable-ratio reinforcement schedule, is very difficult to extinguish.

Teachers often use the technique of stopping reinforcement to eliminate undesirable behaviors. For example, suppose a teacher notices that a particular student acts up in class to gain the attention of the other students. If the teacher can figure out a way to get the class to ignore the student’s antics, which takes away the reinforcement, then the antics should cease.

The Extinction Burst. In the process of examining extinction, researchers noticed an interesting phenomenon called the extinction burst. If you have ever been in a class with a disruptive student (or if you were the student who clowned around), you may have noticed that initially, when the teacher convinces the class to ignore the behavior, the student who is seeking attention merely does more clowning to get attention. Thus, the behavior suddenly increases in frequency. This increase is known as an extinction burst. The wise teacher encourages the class to continue to ignore the behavior during this period, as it is normally very brief. Eventually, the student’s disruptive behavior should be eliminated if the reinforcement is taken away.

Spontaneous Recovery. Extinction, however, is not the end of the story, for a behavior that was previously extinguished may sometimes recur without any further association with reinforcement or punishment. This reemergence of the behavior is known as spontaneous recovery. Spontaneous recovery may occur when cues associated with a behavior are present. For example, an alcoholic who has stopped drinking may find it very difficult to resist drinking if he or she sits down in a bar. In these cases, the previously extinct drinking behavior may recur at a level (frequency or amount) slightly lower than what was demonstrated when the individual was actively engaged in the addictive behavior. However, the danger of any level of relapse for an addict is significant.

There is some evidence that spontaneous recovery may be avoided following extinction. Lerman, Kelley, Van Camp, and Roane (1999) examined the effect of magnitude of reinforcement on the spontaneous recovery of a previously extinguished behavior. In this study, the authors examined the disruptive behavior, which included screaming and loud clapping, of a 21-year-old woman who had been diagnosed with severe mental retardation. In behavior modification programs, therapists often try to extinguish an unwanted behavior by reinforcing a more positive, desirable behavior that is incompatible with the unwanted behavior. That is, the therapist works to encourage a desirable behavior that, if demonstrated, makes it impossible for the individual to engage in the unwanted behavior.

In Lerman et al.’s study, the 21-year-old woman was reinforced for being quiet, a behavior that is incompatible with screaming or clapping loudly. The manipulation of interest in this study was the amount of reinforcement provided to the young woman when she engaged in the desirable behavior. The therapist had discovered that gaining access to toys was the reinforcement that maintained the screaming and clapping behavior. The woman would engage in the disruptive behaviors until she was placated with the presentation of a toy. To remove the reinforcement, the therapist removed access to the toys. Then, during a series of intervention sessions, the therapist reintroduced access to the toys as a reinforcement for quiet, non-disruptive behavior. On alternating days, the young woman was allowed 10 seconds of access to the toys. On the other days, she was granted 60 seconds of access to the toys. Thus, the difference in reinforcement magnitude was six-fold.

Observations made across 120 sessions showed that the large magnitude reinforcement sessions (60 seconds of toy access) resulted in rapid reductions in unwanted behavior and few instances of spontaneous recovery. However, sessions in which the young woman was reinforced with 10 seconds of toy access demonstrated periods of spontaneous recovery of disruptive screaming behavior. Lerman and colleagues concluded that the magnitude of the reinforcement of the new, more desirable behavior may decrease instances of spontaneous recovery of unwanted behavior.

Finally, researchers have also examined the mechanism of re-learning. As in classical conditioning, if the relationship between the response and consequence recurs, then learning will be rapid and the behavior will be strengthened. Suppose, for example, that you learned to play golf when you were younger. You spent hours in private lessons and on the driving range trying to make good contact with the ball. After much practice, you finally developed the ability to hit the ball. You often reflect on your days playing golf by remembering the “ping!” when you hit the ball squarely and the satisfaction you felt in sending a small ball many yards down a nice, green fairway. Currently, you are too busy to hit the links because you study in your free time. One day, a friend asks you to play a round of golf. Because you are caught up on your studying you have no reason not to take him up on his offer. Although you feel a little rusty, you find that you settle right back into the swing of things, so to speak, with a few practice swings on the range. The familiar “ping!” occurs and you feel satisfied watching the ball sail through the air. The act of swinging the golf club and making good contact with the ball comes back to you quickly. You may be able to think of other instances in which something you learn comes back to you very quickly with the right experience.

Check Your Learning: Beyond the Basics

Researchers have established that the individual parts of the three-part contingency can be manipulated in different ways to change behavior.

- Discrimination occurs when an animal or individual learns to discriminate between different forms of a stimulus. The animal or individual learns which form of the stimulus leads to reinforcement and which form does not. One example discussed in this chapter is the young parent’s ability to distinguish between different types of infant cries and the motivations of each.

- Generalization is the opposite of discrimination. It occurs when an animal or individual learns to respond in the same way to similar, but not identical stimuli. One example of stimulus generalization is the fact that drivers react in the same way to stop lights that are vertically oriented, horizontally oriented, bright, dim, etc. Drivers have learned to generalize their driving responses regardless of slight variations in stop lights.

- Shaping is a form of operant learning in which an animal or individual learns a complex set of behaviors through the reinforcement of successive approximations to the complex goal behavior. Shaping has been used to help individuals learn new behaviors that they do not normally demonstrate. For example, a teacher may use small rewards to shape a shy child to engage in more outgoing behaviors with his or her classmates.

- Continuous reinforcement is the delivery of reinforcement every time a desired behavior is demonstrated. Continuous reinforcement generally results in fast learning, but the learned behavior is less resistant to extinction than behavior learned under intermittent reinforcement.

- The fixed-ratio schedule of reinforcement provides reinforcement after a set number of behaviors are demonstrated. Piecework in which an individual is paid by the number of items produced is the typical example of a fixed-ratio schedule of reinforcement. Many “work at home” offers on television relate to manufacturing piecework that pays an individual based on the number of units produced. In a fixed-ratio schedule, reinforcement would occur only after a minimum number of pieces are produced.

- A post-reinforcement pause is the brief pause in responding that occurs immediately after reinforcement.

- A fixed-interval schedule of reinforcement provides reinforcement after a specific time period has passed provided that the desired behavior has occurred at least one time. Paychecks that arrive once per month (or perhaps every two weeks) as long as the employee has worked are frequently cited as examples of a fixed-interval reinforcement schedule.

- The variable-ratio schedule of reinforcement provides reinforcement after an average number of desired behaviors have been demonstrated. As described earlier in the chapter, slot machines follow a variable-ratio schedule in which reinforcement occurs occasionally, but after an average number of behaviors. The fact that the individual or animal does not know when reinforcement will occur produces a high rate of responding.

- The variable-interval schedule of reinforcement provides reinforcement after an average amount of time provided that the desired behavior has been demonstrated at least one time. Earlier in the chapter, surprise visits by an off-site manager were used to demonstrate this type of reinforcement schedule. Students of psychology often recognize a variable-interval schedule of reinforcement in pop quizzes. That is, if an instructor has warned students that 10 pop quizzes will be administered over the span of a 16-week semester, then the students may determine that the quizzes will occur, on average, a little less than once per week. However, when the pop quizzes are distributed in a truly random manner across the 16-week semester, the student never knows when the event will occur. This leads to a steady rate of studying (responding), which is the goal of the instructor.

- Intermittent reinforcement is the converse of continuous reinforcement. In intermittent reinforcement, reinforcement does not occur each time the behavior is demonstrated.

- Extinction is the elimination of a behavior by removing the reinforcement that maintains it. Behavior modification specialists sometimes find it difficult to determine what is maintaining (through reinforcement) an unwanted behavior. Thus, the first step in extinguishing an unwanted behavior is determining how it is reinforced.

- An extinction burst is a temporary increase in the frequency of a behavior that is being extinguished.

- Spontaneous recovery is the recovery of a behavior after it has been extinguished. Typically, the recovered behavior occurs at a level slightly lower in frequency or magnitude than it was demonstrated previously.

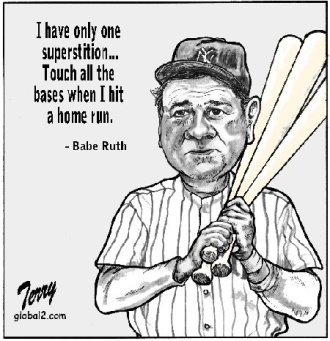

Learning in the Real World: Superstitious Behavior and Uncertainty

As stated at the beginning of the chapter, Thorndike believed that learning was based on the development of associations between behaviors and their consequences. In continuous reinforcement, the reinforcement occurs every time a behavior is demonstrated. Thus, it is easy for one to learn the association between the behavior and a particular consequence. Life is often not as predictable. That is, we sometimes experience intermittent reinforcement of our behaviors. With intermittent reinforcement, the correlation between a behavior and the occurrence of reinforcement is weaker, but we still learn the association eventually. However, we may also associate a behavior with an outcome when the two occur together only randomly – when there is no actual cause-effect relationship between the behavior and the outcome. When our behavior changes based on that random association, we have developed a superstition.

One of your authors was once a chemical engineering major long before pursuing a degree in psychology. As part of the chemical engineering degree program, the author, hereafter “student,” was required to take a course in physics. The student found this course exceedingly difficult. Despite studying aggressively, the student entered the final exam with an F average for the course. However, the professor for the physics course promised that any student earning a letter grade on the exam higher than their end-of-semester average would receive that letter grade as the final grade for the course. The student continued to study for the final exam and found the information as confusing as ever. On the day of the final, the student woke late and quickly dressed in cutoff shorts, a sweatshirt, and tennis shoes. During the final, the student worked diligently on each problem, feeling all the while that the questions did not make sense. When the student’s grade report arrived at the end of the semester, there was a B in physics where an F was expected. From that point forward, the student wore the same “lucky” shorts, sweatshirt, and tennis shoes to every final exam through graduate school.

Why did the student associate the success on the final exam with the clothing and not the month of studying? This is an example of how we learn superstitious behaviors. That is, a person forms an association between a behavior and an outcome that occurred together only randomly. However, the person interprets the co-occurrence as meaningful.

Skinner (1947) documented superstitious behaviors in pigeons. He placed several pigeons in Skinner boxes and set the device to deliver food every 15 seconds. The birds were not reinforced for a specific behavior. He returned later to find the birds demonstrating odd behaviors such as turning in circles or pecking aimlessly. Skinner described the behaviors as “rituals” that were performed between food reinforcement deliveries. He identified these behaviors as superstitious and explained them in the following way. Essentially, the pigeons repeated behaviors that they demonstrated naturally just before the reinforcement occurred. Although the reinforcement was not contingent on a particular behavior, the birds developed an association between the random behavior and the presentation of reinforcement.
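The logic of this explanation can be illustrated with a toy simulation. The Python sketch below is not Skinner's procedure or analysis: the four behaviors, the starting weights, and the rule that food adds a fixed bonus to whatever behavior happened to be occurring are all assumptions chosen to show how non-contingent reinforcement can still strengthen an arbitrary behavior.

```python
import random


def simulate_fixed_time(seconds=3000, period=15, bonus=1.0, seed=0):
    """Toy model: food arrives every `period` seconds regardless of behavior.

    Whatever behavior happens to be occurring when food arrives is
    strengthened, even though it did nothing to produce the food.
    """
    rng = random.Random(seed)
    # Hypothetical behaviors, all equally likely at the start.
    weights = {"turn": 1.0, "peck": 1.0, "bob": 1.0, "hop": 1.0}
    for t in range(1, seconds + 1):
        names = list(weights)
        # Stronger behaviors are proportionally more likely to occur.
        current = rng.choices(names, weights=[weights[n] for n in names])[0]
        if t % period == 0:
            weights[current] += bonus  # accidental pairing with food
    return weights


print(simulate_fixed_time())
```

Because a strengthened behavior is more likely to be under way when the next food delivery arrives, early accidental pairings tend to snowball. Which behavior ends up dominating depends on chance (here, on the random seed), just as different birds developed different rituals.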

It seems that Skinner’s pigeons and humans have a strong desire to understand the cause and effects of behaviors. When we are uncertain, we tend to find relationships among events, behaviors, and consequences. Malinowski (1954) was one of the first researchers to report that superstitious behavior among humans is related to the degree of perceived uncertainty or unreliability of an event. The fact that many professional athletes engage in superstitious behaviors tends to bear this out. Gmelch (1971) reported that superstitious behaviors among baseball players were more common in relation to hitting, which has a low success rate, than in relation to fielding, which enjoys a much higher success rate.

[[Insert Figure 6.6, Superstitious Behavior Among Athletes, about here.]]

Like professional athletes, students often face evaluation scenarios in which an important outcome hinges on their performance. In a more recent study, Rudski and Edwards (2007) found that students reported using rituals and charms more frequently when faced with high-stakes events. An interesting finding of the Rudski and Edwards study was that students were aware that the causal effectiveness of the rituals and charms was weak, yet they still reported frequent use. Thus, it seems that our learned associations may persist and drive our behavior despite the fact that we know they are not effective.

The next time you catch yourself engaging in superstitious behavior, think about how you learned that behavior and whether it is reinforced consistently or inconsistently.

Online Resources

Read about behavior modification strategies based on operant conditioning and the application of these strategies to the classroom.

http://www.ldonline.org/article/6030

Visit the website devoted to applied behavior analysis.

http://www.shapingbehavior.com/

Watch an interview with B. F. Skinner as he discusses operant conditioning in pigeons.

http://www.youtube.com/watch?v=I_ctJqjlrHA

Read the Humane Society’s discussion of positive reinforcement and shaping when training animals.

http://www.humanesociety.org/animals/dogs/tips/dog_training_positive_reinforcement.html

Read B. F. Skinner’s paper on superstition in the pigeon.

http://psychclassics.yorku.ca/Skinner/Pigeon/

Read Chapter 5 from E. L. Thorndike’s Animal Intelligence. This chapter summarizes Thorndike’s laws.

http://psychclassics.yorku.ca/Thorndike/Animal/chap5.htm

View a video that shows a cat learning to escape one of Thorndike’s puzzle boxes.

http://www.youtube.com/watch?v=BDujDOLre-8

Key Terms and Definitions

Operant conditioning

Learning that occurs when a behavior is changed based on the consequences of that behavior; applies to behaviors that are under the conscious control of the animal or person.

The law of effect

States that behaviors followed by reinforcing consequences are more likely to be repeated in the future.

The law of recency

States that the most recently demonstrated behavior is most likely to be repeated in the future.

Radical Behaviorism

Skinner’s idea that behavior is influenced by an organism’s experience with the consequences of that behavior.

The three part contingency

The general model of operant conditioning: A discriminative signal (SD) cues an organism to respond with a specific behavior (R). The response is followed by a reinforcer (SR). The SD, R, and SR are the three parts of the behavioral contingency that produces operant conditioning.

Positive reinforcement

The application of a pleasant stimulus or consequence, intended to increase the likelihood that the animal or individual will repeat a targeted behavior in the future.

Punishment

The application of a negative stimulus or consequence, intended to decrease future demonstrations of a targeted behavior.

Negative reinforcement

The removal of an unpleasant stimulus or consequence, intended to encourage future demonstrations of a targeted behavior.

Omission training or extinction training

The removal of a pleasant stimulus or consequence, intended to discourage future demonstrations of a targeted behavior.

Discrimination

Occurs when an animal or individual learns to tell the difference between two or more forms of a stimulus; the animal or individual learns which form leads to reinforcement.

Generalization

Occurs when an animal or individual learns to respond in the same way to similar, but not identical stimuli.

Shaping

A form of operant learning in which an animal or individual learns a complex set of behaviors through the reinforcement of successive approximations to the complex goal behavior.

Continuous reinforcement

The delivery of reinforcement every time a desired behavior is demonstrated.

Fixed-ratio (FR) schedule

Provides reinforcement after a set number of behaviors are demonstrated.

Post-reinforcement pause

The brief cessation of responding that occurs immediately after reinforcement.

Fixed-interval (FI) schedule

Provides reinforcement after a specific time period has passed ,provided that the desired behavior has occurred at least one time.

Variable-ratio (VR) schedule

Provides reinforcement after a varying number of desired behaviors; the required number changes from one reinforcement to the next but averages out to a set value.

Variable-interval (VI) schedule

Provides reinforcement after a varying amount of time that averages out to a set value, provided that the desired behavior has been demonstrated at least one time.

Intermittent reinforcement

Converse of continuous reinforcement; schedule in which reinforcement does not occur each time the behavior is demonstrated.

Extinction

The elimination of a behavior by removing the reinforcement that maintains it.

Extinction burst

The temporary increase in the frequency of a behavior that is being extinguished.

Spontaneous recovery

The reappearance of a previously extinguished behavior, typically after some time has passed since extinction.
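
The four reinforcement schedules defined above can also be read as simple decision rules: given a response, is a reinforcer delivered or not? The Python sketch below is one illustrative way to express those rules, not a model taken from the chapter. The function names, the use of a time stamp t in arbitrary units, the choice to time intervals from the most recent reinforcer, and the uniform distributions used for the variable schedules are all assumptions made for the example.

```python
import random


def fixed_ratio(n):
    """FR schedule: reinforce every n-th response (n is a hypothetical parameter)."""
    count = 0

    def respond(t):
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False

    return respond


def fixed_interval(interval):
    """FI schedule: reinforce the first response made once `interval` time units
    have passed since the last reinforcer (assumed timing convention)."""
    last = 0.0

    def respond(t):
        nonlocal last
        if t - last >= interval:
            last = t
            return True
        return False

    return respond


def variable_ratio(mean_n, rng):
    """VR schedule: the required number of responses varies around mean_n.
    Assumption: requirements are drawn uniformly from 1 .. 2*mean_n - 1."""
    def draw():
        return rng.randint(1, 2 * mean_n - 1)

    count, target = 0, draw()

    def respond(t):
        nonlocal count, target
        count += 1
        if count >= target:
            count, target = 0, draw()
            return True
        return False

    return respond


def variable_interval(mean_interval, rng):
    """VI schedule: the required wait varies around mean_interval.
    Assumption: waits are drawn uniformly from 0 .. 2*mean_interval."""
    def draw():
        return rng.uniform(0, 2 * mean_interval)

    last, wait = 0.0, draw()

    def respond(t):
        nonlocal last, wait
        if t - last >= wait:
            last, wait = t, draw()
            return True
        return False

    return respond


# Ten responses on an FR-5 schedule: only the 5th and 10th are reinforced.
fr5 = fixed_ratio(5)
outcomes = [fr5(t) for t in range(1, 11)]
```

Each function returns a respond(t) rule that is called once per response and reports whether that response earns a reinforcer. Under the ratio schedules only the count of responses matters, while under the interval schedules responding faster does not bring the next reinforcer any sooner.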

References

Gmelch, G. (1971). Baseball magic. In G. Stone (Ed.), Games, sport, and conflict (pp. 346-352). Boston, MA: Little Brown.

Guttman, N., & Kalish, H. (1956). Discriminability and stimulus generalization. Journal of Experimental Psychology, 51, 79-88.

Lerman, D. C., Kelley, M. E., Van Camp, C. M., & Roane, H. S. (1999). Effects of reinforcement magnitude on spontaneous recovery. Journal of Applied Behavior Analysis, 32(2), 197-200.

Malinowski, B. (1954). Magic, science, and religion and other essays. Garden City, NY: Doubleday.

Markham, S. E., Scott, K. D., & McKee, G. H. (2002). Recognizing good attendance: A longitudinal, quasi-experimental field study. Personnel Psychology, 55, 639-660.

Ricciardi, J. N., Luiselli, J. K., & Camare, M. (2006). Shaping approach responses as intervention for specific phobia in a child with autism. Journal of Applied Behavior Analysis, 39, 445-448.

Rudski, J. M., & Edwards, A. (2007). Malinowski goes to college: Factors influencing students’ use of ritual and superstition. The Journal of General Psychology, 134(2), 389-401.

Skinner, B. F. (1938). The behavior of organisms: An experimental analysis. New York: Appleton-Century-Crofts.

Skinner, B. F. (1948). “Superstition” in the pigeon. Journal of Experimental Psychology, 38, 168-172.

Thorndike, E. L. (1911). Animal intelligence: Experimental studies. New York: Macmillan.

Tables [there are no tables for this chapter]

Figures

Figure 6.1 Edward Thorndike’s puzzle box

Figure 6.2 Skinner Box

Figure 6.3 Drawing of pigeon(s) with colored lights [to be rendered]

Figure 6.4 Stimulus-generalization gradient curve [to come]

Figure 6.5 Responding Patterns for Different Reinforcement Schedules

Figure 6.6 Superstitious Behavior Among Athletes

Source: copyright Marvin Terry. Published in Baseball Almanac.