13.2 Language & Culture

Perspectives: An Open Invitation to Cultural Anthropology, 2e

Linda Light, California State University, Long Beach

Linda.Light@csulb.edu

Learning Objectives

- Explain the relationship between human language and culture.

- Identify the universal features of human languages and the design features that make them unique.

- Describe the structures of language: phonemes, morphemes, syntax, semantics, and pragmatics.

- Assess the relationship between language variations and ethnic or cultural identity.

- Explain how language is affected by social class, ethnicity, gender, and other aspects of identity.

- Evaluate the reasons why languages change and efforts that can be made to preserve endangered languages.

THE IMPORTANCE OF HUMAN LANGUAGE TO HUMAN CULTURE

Students in my cultural anthropology classes are required to memorize a six-point thumbnail definition of culture, which includes all of the features most anthropologists agree are key to its essence. I then refer back to the definition as we arrive at each relevant unit in the course. Here it is:

Culture is:

- An integrated system of mental elements (beliefs, values, worldview, attitudes, norms), the behaviors motivated by those mental elements, and the material items created by those behaviors;

- A system shared by the members of the society;

- 100 percent learned, not innate;

- Based on symbolic systems, the most important of which is language;

- Humankind’s most important adaptive mechanism, and

- Dynamic, constantly changing.

This definition underscores the crucial importance of language to all human cultures. In fact, human language can be considered a culture’s most important feature, since complex human culture could not exist without language and language could not exist without culture. They are inseparable because language encodes culture and provides the means through which culture is shared and passed from one generation to the next. Humans think in language and carry out virtually all cultural activities using language. It surrounds us in every waking and sleeping moment, although we do not usually think about its importance. For that matter, humans do not think about their immersion in culture either, much as fish, if they were endowed with intelligence, would not think much about the water that surrounds them. Without language and culture, humans would be just another great ape. Anthropologists must have skills in linguistics so they can learn the languages and cultures of the people they study.

All human languages are symbolic systems that use symbols to convey meaning. A symbol is anything that serves to refer to something else but has a meaning that cannot be guessed, because there is no obvious connection between the symbol and its referent. This feature of human language is called arbitrariness. For example, many cultures assign meanings to certain colors, but the meaning of a particular color may be completely different from one culture to another. Western cultures like the United States use the color black to represent death, but in China it is the color white that traditionally symbolizes death. White in the United States symbolizes purity and is used for brides’ dresses, but a traditional Chinese bride would not wear white to her wedding. Instead, she usually wears red, the color of good luck. Words in languages are symbolic in the same way. The word key in English is pronounced almost exactly like the word qui in French, meaning “who,” and ki in Japanese, meaning “tree.” One must learn the language in order to know what any word means.

THE BIOLOGICAL BASIS OF LANGUAGE

The anatomical changes that eventually made language possible began six to seven million years ago, when the first human ancestors became bipedal, habitually walking on two feet. Most other mammals are quadrupedal: they move about on four feet. This evolutionary development freed the forelimbs of human ancestors for other activities, such as carrying items and doing increasingly complex things with their hands. It also started a chain of anatomical adaptations. One adaptation was a change in the way the skull was placed on the spine. In quadrupedal animals the skull is attached to the spine at the back of the skull, because the head is thrust forward. With the new upright bipedal posture of pre-humans, the attachment point moved toward the center of the base of the skull. This skeletal change in turn brought about changes in the shape and position of the mouth and throat anatomy.

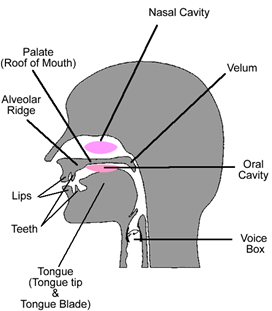

Humans have all the same organs in the mouth and throat that the other great apes have, but the larynx, or voice box (whose front forms the bump known as the Adam’s apple), sits lower in the throat in humans. This creates a longer pharynx, or throat cavity, which functions as a resonating and amplifying chamber for the speech sounds produced in the larynx. The more rounded shape of the tongue and palate (the roof of the mouth) enables humans to make a greater variety of sounds than any great ape can make (see Figure 1).

Speech is produced by exhaling air from the lungs through the larynx. The voice is created by the vibration of the vocal folds in the larynx when they are drawn together, leaving a narrow slit through which the air passes under pressure. The more tightly the vocal folds are stretched, the faster they vibrate and the higher the pitch of the sound produced. The sound waves in the exhaled air pass through the pharynx and then out through the mouth and/or the nose. The different positions and movements of the articulators (the tongue, the lips, the jaw) produce the different speech sounds.

Along with the changes in mouth and throat anatomy that made speech possible came a gradual enlargement and compartmentalization of the brain of human ancestors over millions of years. The modern human brain is among the largest of all animals in proportion to body size. This development was crucial to language ability, because a tremendous amount of brain power is required to process, store, produce, and comprehend the complex system of any human language and its associated culture. In addition, two areas in the left hemisphere of the brain are specialized for processing language, and no other species has comparable language-dedicated areas. They are Broca’s area, in the left frontal lobe near the temple, and Wernicke’s area, in the temporal lobe just behind the left ear.

Language Acquisition in Childhood

Linguist Noam Chomsky proposed that all languages share the properties of what he called Universal Grammar (UG), a basic template for all human languages, which he believed was embedded in our genes, hard-wiring the brains of all human children to acquire language. Although the theory of UG is somewhat controversial, it is a fact that all normally developing human infants have an innate ability to acquire the language or languages used around them. Without any formal instruction, children easily acquire the sounds, words, grammatical rules, and appropriate social functions of the language(s) that surround them. They master the basics by about age three or four. This also applies to children, both deaf and hearing, who are exposed to signed language.

If a child is not surrounded by people who are using a language, that child will gradually lose the ability to acquire language naturally and without effort. If this deprivation continues until puberty, the child will no longer be biologically capable of attaining native fluency in any language, although they might be able to achieve a limited competency. This phenomenon has been called the Critical Age Range Hypothesis, more widely known as the Critical Period Hypothesis. A number of abused children who were isolated from language input until they were past puberty provide stark evidence to support this hypothesis. The classic case of “Genie” is an example of this evidence.[1]

Genie was found when she was almost 14. She had been confined to her room for all of her life and, since the age of two, had been tied to a potty chair during the day and to a crib at night, with almost no verbal interaction and only minimal attention to her physical needs. After her rescue, a linguist worked with her intensively for about five years in an attempt to help her learn to talk, but she never achieved language competence beyond that of a two-year-old child. The hypothesis also applies to the acquisition of a second language. A person who starts studying another language after puberty will have to exert a great deal of effort and will rarely achieve native fluency, especially in pronunciation. There is plenty of evidence for this in the U.S. educational system, and you may well have had this experience yourself. It makes you wonder why our schools rarely offer foreign language classes before the junior high school level.

The Gesture Call System and Non-Verbal Human Communication

All animals communicate, and many make meaningful sounds. Others use visual signs, such as facial expressions, color changes, body postures and movements, light (fireflies), or electricity (some eels). Many use the senses of smell and touch. Most animals use a combination of two or more of these systems in their communication, but their systems are closed: they cannot create new meanings or messages. Human communication is an open system that can easily create new meanings and messages. Most animal communication systems are basically innate, so the animals do not have to learn them, but some species’ systems entail a certain amount of learning. For example, songbirds have the innate ability to produce the typical songs of their species, but most of them must learn to perform those songs properly from older birds.

Great apes and other primates have relatively complex systems of communication that use varying combinations of sound, body language, scent, facial expression, and touch. Their systems have therefore been called gesture-call systems. Humans share a number of forms of this gesture-call, or non-verbal, system with the great apes, and spoken language most likely evolved embedded within it. All human cultures have not only verbal languages but also non-verbal systems that are consistent with their verbal languages and cultures and that vary from one culture to another. We will discuss the three most important human non-verbal communication systems.

Kinesics

Kinesics is the term used to designate all forms of human body language, including gestures, body position and movement, facial expressions, and eye contact. Although all humans can potentially perform these in the same way, different cultures may have different rules about how to use them. For example, Americans highly value eye contact as a way to show that we are paying attention and as a means of showing respect. But in Japan, prolonged direct eye contact is often considered inappropriate, especially between two people of different social statuses. The lower-status person is expected to look down and avoid eye contact to show respect for the higher-status person.

Facial expressions can convey a host of messages, usually related to the person’s attitude or emotional state. Hand gestures may convey unconscious messages, or constitute deliberate messages that can replace or emphasize verbal ones.

Proxemics

Proxemics is the study of the social use of space, specifically the distance individuals try to maintain around themselves in interactions with others. The size of this “space bubble” depends on a number of social factors, including the relationship between the two people, their relative status, their gender and age, their current attitude toward each other, and above all their culture. In some cultures, such as in Brazil, people typically interact in a relatively close physical space, usually with a lot of touching. Other cultures, like the Japanese, prefer to maintain a greater distance with minimal touching or none at all. If one person stands too far away from the other according to cultural standards, it might convey the message of emotional distance. If a person invades the culturally recognized space bubble of another, it could signal a threat. Or it might show a desire for a closer relationship. It all depends on who is involved.

Paralanguage

Paralanguage refers to those characteristics of speech beyond the actual words spoken. These include the features that are inherent to all speech: pitch, loudness, and tempo or duration of the sounds. Varying pitch can convey any number of messages: a question, sarcasm, defiance, surprise, confidence or lack of it, impatience, and many other often subtle connotations. An utterance that is shouted at close range usually conveys an emotional element, such as anger or urgency. A word or syllable that is held for an unusually long time can intensify the impact of that word. For example, compare “It’s beautiful” with “It’s beauuuuu-tiful!” Often the latter type of expression is further emphasized by extra loudness on the syllable, and perhaps higher pitch; all of these can serve to make part of the utterance more important. Other paralinguistic features that often accompany speech include a chuckle, a sigh or sob, deliberate throat clearing, and many other non-verbal sounds like “hm,” “oh,” “ah,” and “um.”

Most non-verbal behaviors are unconsciously performed and not noticed unless someone violates the cultural standards for them. In fact, a deliberate violation itself can convey meaning. Other non-verbal behaviors are done consciously like the U.S. gestures that indicate approval, such as thumbs up, or making a circle with your thumb and forefinger—“OK.” Other examples are waving at someone or putting a forefinger to your lips to quiet another person. Many of these deliberate gestures have different meanings (or no meaning at all) in other cultures. For example, the gestures of approval in U.S. culture mentioned above may be obscene or negative gestures in another culture.

Try this: As an experiment in the power of non-verbal communication, try violating one of the cultural rules for proxemics or eye contact with a person you know. Choosing your “guinea pigs” carefully (they might get mad at you!), try standing or sitting a little closer or farther away from them than you usually would for a period of time, until they notice (and they will notice). Or, you could choose to give them a bit too much eye contact, or too little, while you are conversing with them. Note how they react to your behavior and how long it takes them to notice.

HUMAN LANGUAGE COMPARED WITH THE COMMUNICATION SYSTEMS OF OTHER SPECIES

Human language is qualitatively and quantitatively different from the communication systems of all other animal species. Linguists have long tried to create a working definition that distinguishes it from non-human communication systems. Linguist Charles Hockett’s solution was to create a hierarchical list of what he called design features, or descriptive characteristics, of the communication systems of all species, including that of humans.[2] The features of human language that no other species shares show exactly how human language differs from all other communication systems.

Hockett’s Design Features

The communication systems of all species share the following features:

1. A mode of communication by which messages are transmitted through a system of signs, using one or more sensory systems to transmit and interpret, such as vocal-auditory, visual, tactile, or kinesic;

2. Semanticity: the signs carry meaning for the users, and

3. Pragmatic function: all signs serve a useful purpose in the life of the users, from survival functions to influencing others’ behavior.

Some communication systems (including that of humans) also exhibit the following features:

4. Interchangeability: the ability of individuals within a species to both send and receive messages. One species that lacks this feature is the honeybee. Only a female “worker bee” can perform the dance that conveys to her hive-mates the location of a newly discovered food source. Another example is the mockingbird, whose songs are performed mainly by the males to attract a mate and mark their territory.

5. Cultural transmission: the need for some aspects of the system to be learned through interaction with others, rather than being 100 percent innate or genetically programmed. The mockingbird learns its songs from other birds, or even from other sounds in its environment that appeal to it.

6. Arbitrariness: the form of a sign is not inherently or logically related to its meaning; signs are symbols. It could be said that the movements in the honeybees’ dance are arbitrary since anyone who is not a honeybee could not interpret their meaning.

Only true human language also has the following characteristics:

7. Discreteness: every human language is made up of a small number of meaningless discrete sounds. That is, the sounds can be isolated from each other, for purposes of study by linguists, or to be represented in a writing system.

8. Duality of patterning (two levels of combination): at the first level of patterning, these meaningless discrete sounds, called phonemes, are combined to form words and parts of words that carry meaning, called morphemes. At the second level of patterning, morphemes are recombined according to a set of rules called syntax to form an infinite number of possible longer messages, such as phrases and sentences. It is this level of combination that is entirely lacking in the communication abilities of all other animals, and it makes human language an open system while all other animal systems are closed.

9. Displacement: the ability to communicate about things that are outside of the here and now, made possible by the features of discreteness and duality of patterning. While other species are limited to communicating about their immediate time and place, we can talk about any time in the future or past, about any place in the universe, or even about fictional places.

10. Productivity/creativity: the ability to produce and understand messages that have never been expressed before or to express new ideas. People do not speak according to prepared scripts, as if they were in a movie or a play; they create their utterances spontaneously, according to the rules of their language. It also makes possible the creation of new words and even the ability to lie.

A number of great apes, including gorillas, chimpanzees, bonobos, and orangutans, have been taught human sign languages, which have all of the human design features. In each case, the apes have been able to communicate as humans do to some extent, but their linguistic abilities are limited by the lesser cognitive abilities that accompany their smaller brains.

UNIVERSALS OF LANGUAGE

Languages we do not speak or understand may sound like meaningless babble to us, but all the human languages that have ever been studied by linguists are amazingly similar. They all share a number of characteristics, which linguists call language universals. These language universals can be considered properties of the Universal Grammar that Chomsky proposed. Here is a list of some of the major ones.

- All human cultures have a human language and use it to communicate.

- All human languages change over time, a reflection of the fact that all cultures are also constantly changing.

- All languages are systematic, rule-driven, equally complex overall, and equally capable of expressing any idea that the speaker wishes to convey. There are no primitive languages.

- All languages are symbolic systems.

- All languages have a basic word order of elements, like subject, verb, and object, with variations.

- All languages have similar basic grammatical categories such as nouns and verbs.

- Every spoken language is made up of discrete sounds that can be categorized as vowels or consonants.

- The underlying structure of all languages is characterized by the feature duality of patterning, which permits any speaker to utter any message they need or wish to convey, and any speaker of the same language to understand the message.

DESCRIPTIVE LINGUISTICS: STRUCTURES OF LANGUAGE

The study of the structures of language is called descriptive linguistics. Descriptive linguists discover and describe the phonemes of a language, research called phonology. They study the lexicon (the vocabulary) of a language and how morphemes are used to create new words, a field called morphology. They analyze the rules by which speakers create phrases and sentences, the study of syntax. And they look at how these features all combine to convey meaning in particular social contexts, fields of study called semantics and pragmatics.

The Sounds of Language: Phonemes

A phoneme is defined as the minimal unit of sound that can make a difference in meaning if substituted for another sound in a word that is otherwise identical. The phoneme itself does not carry meaning. For example, in English if the sound we associate with the letter “p” is substituted for the sound of the letter “b” in the word bit, the word’s meaning changes, because now it is pit, a different word with an entirely different meaning. The human articulatory anatomy is capable of producing many hundreds of sounds, but no language has more than about 100 phonemes. English has about 36 or 37 phonemes, including about eleven vowels, depending on dialect. Hawaiian has only five vowels and about eight consonants. No two languages have exactly the same set of phonemes.

Linguists use a written system called the International Phonetic Alphabet (IPA) to represent the sounds of a language. Unlike the letters of our alphabet that spell English words, each IPA symbol always represents only one sound, no matter the language. For example, the letter “a” in English can represent the different vowel sounds in such words as cat, make, papa, law, etc., but the IPA symbol /ɑ/ always and only represents the vowel sound heard in American English papa or pop.

The Units That Carry Meaning: Morphemes

A morpheme is a minimal unit of meaning in a language; a morpheme cannot be broken down into any smaller units that still relate to the original meaning. It may be a word that can stand alone, called an unbound (or free) morpheme (dog, happy, go, educate). Or it could be a part of a word that carries meaning but cannot stand alone and must be attached to another morpheme; these are called bound morphemes. Bound morphemes may be placed at the beginning of the root word, such as un– (“not,” as in unhappy) or re– (“again,” as in rearrange). Or they may follow the root, as with English -ly (which makes an adjective into an adverb: quickly from quick) and -s (for plural, possessive, or a verb ending). Some languages, like Chinese, have very few if any bound morphemes. Others, like Swahili, have so many that nouns and verbs cannot stand alone as separate words; they must have one or more bound morphemes attached to them.

The Structure of Phrases and Sentences: Syntax

Rules of syntax tell the speaker how to put morphemes together grammatically and meaningfully. There are two main types of syntactic rules: rules that govern word order, and rules that direct the use of certain morphemes that perform a grammatical function. For example, the order of words in the English sentence “The cat chased the dog” cannot be changed around or its meaning would change: “The dog chased the cat” (something entirely different) or “Dog cat the chased the” (something meaningless). English relies on word order much more than many other languages do because it has so few morphemes that can do the same type of work.

For example, in our sentence above, the phrase “the cat” must go first in the sentence, because that is how English indicates the subject of the sentence, the one that does the action of the verb. The phrase “the dog” must go after the verb, indicating that it is the dog that received the action of the verb, or is its object. Other syntactic rules tell us that we must put “the” before its noun, and “–ed” at the end of the verb to indicate past tense. In Russian, the same sentence has fewer restrictions on word order because it has bound morphemes that are attached to the nouns to indicate which one is the subject and which is the object of the verb. So the sentence koshka [chased] sobaku, which means “the cat chased the dog,” has the same meaning no matter how we order the words, because the –a on the end of koshka means the cat is the subject, and the –u on the end of sobaku means the dog is the object. If we switched the endings and said koshku [chased] sobaka, now it means the dog did the chasing, even though we haven’t changed the order of the words. Notice, too, that Russian does not have a word for “the.”

Conveying Meaning in Language: Semantics and Pragmatics

The whole purpose of language is to communicate meaning about the world around us, so the study of meaning is of great interest to linguists and anthropologists alike. The field of semantics focuses on the meanings of words and other morphemes, as well as on how the meanings of phrases and sentences derive from them. Recently, linguists have enjoyed examining the multitude of meanings and uses of the word “like” among American youth, made famous through the film Valley Girl in 1983. Although it started as a feature of California English, it has spread all across the country, and even to many young second-language speakers of English. It’s, like, totally awesome, dude!

The study of pragmatics looks at the social and cultural aspects of meaning and how the context of an interaction affects it. One aspect of pragmatics is the speech act. Any time we speak we are performing an act, but what we are actually trying to accomplish with that utterance may not be interpretable through the dictionary meanings of the words themselves. For example, if you are at the dinner table and say, “Can you pass the salt?” you are probably not asking if the other person is capable of giving you the salt. Often the more polite an utterance, the less direct it will be syntactically. For example, rather than using the imperative syntactic form and saying “Give me a cup of coffee,” it is considered more polite to use the question form and say “Would you please give me a cup of coffee?”

LANGUAGE VARIATION: SOCIOLINGUISTICS

Languages Versus Dialects

The number of languages spoken around the world is somewhat difficult to pin down, but we usually see a figure between 6,000 and 7,000. Why are they so hard to count? The term language is commonly used to refer to the idealized “standard” of a variety of speech with a name, such as English, Turkish, Swedish, Swahili, or Urdu. One language is usually considered to be incomprehensible to speakers of another one. The word dialect is often applied to a subordinate variety of a language and the common assumption is that we can understand someone who speaks another dialect of our own language.

These terms are not really very useful for describing actual language variation. For example, many of the hundreds of “dialects” spoken in China are very different from each other and are not mutually comprehensible to speakers of other Chinese “dialects.” The Chinese government promotes the idea that all of them are simply variants of the “Chinese language” because it helps to promote national solidarity and loyalty among Chinese people and to reduce regional factionalism. In contrast, the languages of Sweden, Denmark, and Norway are considered separate languages, but if a Swede, a Dane, and a Norwegian were to have a conversation together, each could use their own language and understand most of what the others say. Does this make them dialects or languages? The Serbian and Croatian languages are considered by their speakers to be separate languages because of distinct political and religious cultural identities. They even employ different writing systems to emphasize the difference, but they are essentially the same, and their speakers easily understand each other.

So in the words of linguist John McWhorter, actually “dialects is all there is.”[3] What he means by this is that a continuum of language variation is geographically distributed across populations in much the same way that human physical variation is, with the degree of difference between any two varieties increasing with distance. This is the case even across national boundaries. Catalan, the language of northeastern Spain, is closer to the Occitan varieties of southern France, such as Provençal, than either is to its associated national language, Spanish or French. One language variety blends into the next geographically, like the colors of the rainbow. However, the historical influence of colonizing states has affected that natural distribution. Thus, there is no natural “language” with variations called “dialects.” Usually one variety of a language is considered the “standard,” but this choice is based on the social and political prestige of the group that speaks that variety; it has no inherent superiority over the other variants called its “dialects.” The way people speak is an indicator of who they are, where they come from, and what social groups they identify with, as well as what particular situation they find themselves in and what they want to accomplish with a specific interaction.

How Does Language Variation Develop?

Why do people from different regions in the United States speak so differently? Why do they speak differently from the people of England? A number of factors have influenced the development of English dialects, and they are typical causes of dialect variation in other languages as well.

Settlement patterns: The first English settlers to North America brought their own dialects with them. Settlers from different parts of the British Isles spoke different dialects (they still do), and they tended to cluster together in their new homeland. The present-day dialects typical of people in various areas of the United States, such as New England, Virginia, New Jersey, and Delaware, still reflect these original settlement sites, although they certainly have changed from their original forms.

Migration routes: After they first settled in the United States, some people migrated further west, establishing dialect boundaries as they traveled and settled in new places.

Geographical factors: Rivers, mountains, lakes, and islands affected migration routes and settlement locations, as well as the relative isolation of the settlements. People in the Appalachian Mountains and on certain islands off the Atlantic coast were relatively isolated from other speakers for many years and still speak dialects that sound very archaic compared with the mainstream.

Language contact: Interactions with other language groups, such as Native Americans, French, Spanish, Germans, and African-Americans, along paths of migration and settlement resulted in mutual borrowing of vocabulary, pronunciation, and some syntax.

Have you ever heard of “Spanglish”? It is a form of Spanish spoken near the borders of the United States that is characterized by a number of words adopted from English and incorporated into the phonological, morphological, and syntactic systems of Spanish. For example, the Spanish sentence Voy a estacionar mi camioneta, or “I’m going to park my truck,” becomes in Spanglish Voy a parquear mi troca. Many other languages have such English-flavored versions, including Franglais and Chinglish. Some countries, especially France, actively try to prevent the incursion of other languages (especially English) into their language, but the effort has proved largely futile. People will use whatever words serve their purposes, even when the “language police” disapprove. Some Franglais words that have entered French in spite of the authorities’ protestations include the recently acquired binge-drinking, beach, e-book, and drop-out, while older ones include le weekend and stop.

Region and occupation: Rural farming people may retain more archaic expressions than urban people, who have much more contact with contemporary lifestyles and diverse speech communities.

Social class: Social status differences cut across all regional variations of English. These differences reflect the education and income level of speakers.

Group reference: Other categories of group identity, including ethnicity, national origin of ancestors, age, and gender, can be symbolized by the way we speak, indicating in-group versus out-group identity. We talk like other members of our groups, however we define those groups, as a means of maintaining social solidarity with other group members. This can include occupational or interest-group jargon, such as medical or computer terms, or surfer talk, as well as pronunciation and syntactic variations. Failing to accommodate linguistically to the people we are speaking with may be interpreted as a kind of symbolic rejection of their group, even if their dialect is stigmatized as the marker of a disrespected minority group. Most people are able to use more than one style of speech, also called a register, so that they can adjust depending on whom they are interacting with: their family and friends, their boss, a teacher, or other members of the community.

Linguistic processes: New developments that promote the simplification of pronunciation or syntactic changes to clarify meaning can also contribute to language change.

These factors do not work in isolation. Any language variation results from a combination of social, historical, and linguistic factors that collectively affect how individuals speak; dialect change in a particular speech community is therefore a continual process.

Try This: Which of these terms do you use, pop versus soda versus coke? Pail versus bucket? Do you say “vayse” or “vahze” for the vessel you put flowers in? Where are you from? Can you find out where each term or pronunciation is typically used? Can you find other regional differences like these?

What Is a “Standard” Variety of a Language?

The standard of any language is simply one of many variants that has been given special prestige in the community because it is spoken by the people who have the greatest amount of prestige, power, and (usually) wealth. In the case of English, its development was in part the result of the invention of the printing press in the fifteenth century and the subsequent increase in printed versions of the language. This then stimulated more than a hundred years of deliberate efforts by grammarians to standardize spelling and grammatical rules. Their decisions invariably favored the dialect spoken by the aristocracy. Some of their other decisions were rather arbitrarily determined by standards more appropriate to Latin, or even mathematics. For example, as in many other languages, it was typical among the common people of the time (and it still is among the present-day working classes and in casual speech) to use multiple negative particles in a sentence, as in “I don’t have no money.” The eighteenth-century grammarians said we must use either don’t or no, but not both, that is, “I don’t have any money” or “I have no money.” They based this on a mathematical rule that says that two negatives make a positive. (When multiplying two negative numbers, such as -5 times -2, the result is a positive 10.) These grammarians claimed that if we used the double negative, we would really be saying the positive, or “I have money.” Obviously, anyone who utters that double-negative sentence is not trying to say that they have money, but the rule still applies to standard English to this day.

Non-standard varieties of English, also known as vernaculars, are usually distinguished from the standard by their inclusion of such stigmatized forms as multiple negatives; the verb form ain’t (which was originally the normal contraction of am not, as in “I ain’t,” comparable to “you aren’t” or “she isn’t”); the pronunciation of words like this and that as dis and dat; the pronunciation of final “-ing” as “-in”; and any other feature that grammarians have decreed to be “improper” English.

The standard of any language is a rather artificial, idealized form of language, the language of education. One must learn its rules in school because it is not anyone’s true first language. Everyone speaks a dialect, although some dialects are closer to the standard than others. Those that are regarded with the least prestige and respect in society are associated with the groups of people who have the least amount of social prestige. People with the highest levels of education have greater access to the standard, but even they usually revert to their first dialect as the appropriate register in informal situations with friends and family. In other words, no language variety is inherently better or worse than any other one. It is social attitudes that lead people to label some varieties as “better” or “proper,” and others as “incorrect” or “bad.” Recall Language Universal 3: “All languages are systematic, rule driven, and equally complex overall, and equally capable of expressing any idea that the speaker wishes to convey.”

In 1972 sociolinguist William Labov published an influential study, first carried out in the 1960s, in which he looked at the pronunciation of the sound /r/ in the speech of New Yorkers in department stores. Many people from that area drop the /r/ sound in words like fourth and floor (fawth, floah), but this pronunciation is primarily associated with lower social classes and is not a feature of the approved standard for English, even in New York City. In contrasting contexts, an upscale store and a discount store, Labov asked salespeople what floor a certain item could be found on, already knowing it was the fourth floor. He then asked them to repeat their answer, as though he hadn’t heard it correctly. He compared each person’s first and second answers, and he compared the answers in the expensive store with those in the cheaper store. He found 1) that the responders in the two stores differed overall in their pronunciation of this sound, and 2) that the same person may differ between situations of less and more self-consciousness (first versus second answer). That is, people in the upscale store tended to pronounce the /r/, and responders in both stores tended to produce the standard pronunciation more in their second answers in an effort to sound “higher class.” These results showed that the pronunciation or deletion of /r/ in New York correlates with both social status and context.[4]

There is nothing inherently better or worse in either pronunciation; it depends entirely on the social norms of the community. The same /r/ deletion that is stigmatized in New York City is the prestigious, standard form in England, used by the upper class and announcers for the BBC. The pronunciation of the /r/ sound in England is stigmatized because it is used by lower-status people in some industrial cities.

It is important to note that almost everyone has access to a number of different language variations and registers. They know that one variety is appropriate to use with some people in some situations, and others should be used with other people or in other situations. The use of several language varieties in a particular interaction is known as code-switching.

Try This: To understand the importance of using the appropriate register in a given context, the next time you are with a close friend or family member try using the register, or style of speech, that you might use with your professor or a respected member of the clergy. What is your friend’s reaction? I do not recommend trying the reverse experiment, using a casual vernacular register with such a respected person (unless they are also a close friend). Why not?

Linguistic Relativity: The Whorf Hypothesis

In the early 1930s, Benjamin Whorf was a graduate student studying with linguist Edward Sapir at Yale University in New Haven, Connecticut. Sapir, considered the father of American linguistic anthropology, was responsible for documenting and recording the languages and cultures of many Native American tribes, which were disappearing at an alarming rate. This was due primarily to the deliberate efforts of the United States government to force Native Americans to assimilate into the Euro-American culture. Sapir and his predecessors were well aware of the close relationship between culture and language because each culture is reflected in and influences its language. Anthropologists need to learn the language of the culture they are studying in order to understand the world view of its speakers. Whorf believed that the reverse is also true, that a language affects culture as well, by actually influencing how its speakers think. His hypothesis proposes that the words and the structures of a language influence how its speakers think about the world, how they behave, and ultimately the culture itself. (See our definition of culture above.) Simply stated, Whorf believed that human beings see the world the way they do because the specific languages they speak influence them to do so. He developed this idea through both his work with Sapir and his work as a fire prevention inspector (he was trained as a chemical engineer) for the Hartford Fire Insurance Company, investigating the causes of fires.

One of his cases while working for the insurance company was a fire at a business where there were a number of gasoline drums. Those that contained gasoline were surrounded by signs warning employees to be cautious around them and to avoid smoking near them. The workers were always careful around those drums. Empty gasoline drums, on the other hand, were stored in another area, and employees were more careless there. Someone tossed a cigarette or lighted match into one of the “empty” drums, which went up in flames and started a fire that burned the business to the ground. Whorf theorized that the meaning of the word empty implied to the worker that “nothing” was there to be cautious about, so the worker behaved accordingly. Unfortunately, an “empty” gasoline drum may still contain fumes, which are more flammable than the liquid itself.

Whorf’s studies at Yale involved working with Native American languages, including Hopi. The Hopi language is quite different from English in many ways. For example, let’s look at how the Hopi language deals with time. Western languages (and cultures) view time as a flowing river in which we are being carried continuously away from a past, through the present, and into a future. Our verb systems reflect that concept with specific tenses for past, present, and future. We think of this concept of time as universal, that all humans see it the same way. According to Whorf, a Hopi speaker has very different ideas, and the structure of their language both reflects and shapes the way they think about time. In his analysis, the Hopi language has no present, past, or future tense. Instead, it divides the world into what Whorf called the manifested and unmanifest domains. The manifested domain deals with the physical universe, including the present, the immediate past and future; the verb system uses the same basic structure for all of them. The unmanifest domain involves the remote past and the future, as well as the world of desires, thought, and life forces. The set of verb forms dealing with this domain is consistent for all of these areas and is different from the manifested ones. Whorf also reported that Hopi has no words for hours, minutes, or days of the week.

Native Hopi speakers often had great difficulty adapting to life in the English-speaking world when it came to being “on time” for work or other events. It is simply not how they had been conditioned to behave with respect to time in their Hopi world, which followed the phases of the moon and the movements of the sun. In a book about the Abenaki who lived in Vermont in the mid-1800s, Trudy Ann Parker described their concept of time, which very much resembled that of the Hopi and many of the other Native American tribes. “They called one full day a sleep, and a year was called a winter. Each month was referred to as a moon and always began with a new moon. An Indian day wasn’t divided into minutes or hours. It had four time periods—sunrise, noon, sunset, and midnight. Each season was determined by the budding or leafing of plants, the spawning of fish or the rutting time for animals. Most Indians thought the white race had been running around like scared rabbits ever since the invention of the clock.”[5]

The lexicon, or vocabulary, of a language is an inventory of the items a culture talks about and has categorized in order to make sense of the world and deal with it effectively. For example, modern life is dictated for many by the need to travel by some kind of vehicle—cars, trucks, SUVs, trains, buses, etc. We therefore have thousands of words to talk about them, including types of vehicles, models, brands, or parts.

The most important aspects of each culture are similarly reflected in the lexicon of its language. Among the societies living in the islands of Oceania in the Pacific, fish have great economic and cultural importance. This is reflected in the rich vocabulary that describes all aspects of the fish and the environments that islanders depend on for survival. For example, in Palau there are about 1,000 fish species, and Palauan fishermen knew, long before biologists existed, details about the anatomy, behavior, growth patterns, and habitat of most of them, in many cases far more than modern biologists know even today. Much of fish behavior is related to the tides and the phases of the moon. Throughout Oceania, the names given to certain days of the lunar months reflect the likelihood of successful fishing. For example, in the Caroline Islands, the name for the night before the new moon is otolol, which means “to swarm.” The name indicates that the best fishing days cluster around the new moon. In Hawaiʻi and Tahiti two sets of days have names containing the particle ʻole or ʻore; one occurs in the first quarter of the moon and the other in the third quarter. The same name is given to the prevailing wind during those phases. The words mean “nothing,” because those days were considered bad for fishing as well as planting.

Parts of Whorf’s hypothesis, known as linguistic relativity, were controversial from the beginning, and still are among some linguists. Yet Whorf’s ideas now form the basis for an entire sub-field of cultural anthropology: cognitive or psychological anthropology. A number of studies have been done that support Whorf’s ideas. Linguist George Lakoff’s work looks at the pervasive existence of metaphors in everyday speech that can be said to predispose a speaker’s world view and attitudes on a variety of human experiences.[6]

A metaphor is an expression in which one kind of thing is understood and experienced in terms of another entirely unrelated thing; the metaphors in a language can reveal aspects of the culture of its speakers. Take, for example, the concept of an argument. In logic and philosophy, an argument is a discussion involving differing points of view, or a debate. But the conceptual metaphor in American culture can be stated as ARGUMENT IS WAR. This metaphor is reflected in many expressions of the everyday language of American speakers: I won the argument. He shot down every point I made. They attacked every argument we made. Your point is right on target. I had a fight with my boyfriend last night. In other words, we use words appropriate for discussing war when we talk about arguments, which are certainly not real war. But we actually think of an argument as a verbal battle that often involves anger, and even violence, which then structures how we argue.

To illustrate that this concept of argument is not universal, Lakoff suggests imagining a culture where an argument is not something to be won or lost, with no strategies for attacking or defending, but rather a dance where the dancers’ goal is to perform in an artful, pleasing way. No anger or violence would occur or even be relevant to speakers of this language, because the metaphor for that culture would be ARGUMENT IS DANCE.

LANGUAGE IN ITS SOCIAL SETTINGS: LANGUAGE AND IDENTITY

The way we speak can be seen as a marker of who we are and with whom we identify. We talk like the other people around us, where we live, our social class, our region of the country, our ethnicity, and even our gender. These categories are not homogeneous. Not all New Yorkers talk exactly the same; not all women speak according to stereotypes; not all African-Americans speak an African-American dialect. No one speaks the same way in all situations and contexts, but there are some consistencies in speaking styles that are associated with many of these categories.

Social Class

As discussed above, people can indicate social class by the way they speak. The closer to the standard version their dialect is, the more they are seen as a member of a higher social class because the dialect reflects a higher level of education. In American culture, social class is defined primarily by income and net worth, and it is difficult (but not impossible) to acquire wealth without a high level of education. However, the speech of people in the higher social classes also varies with the region of the country where they live, because there is no single standard of American English, especially with respect to pronunciation. An educated Texan will sound different from an educated Bostonian, but they will use the standard version of English from their own region. The lower the social class of a community, the more their language variety will differ from both the standard and from the vernaculars of other regions.

Ethnicity

An ethnicity, or ethnic group, is a group of people who identify with each other based on some combination of shared cultural heritage, ancestry, history, country of origin, language, or dialect. In the United States such groups are frequently referred to as “races,” but there is no such thing as biological race, and this misconception has historically led to racism and discrimination. Because of the social implications and biological inaccuracy of the term “race,” it is often more accurate and appropriate to use the terms ethnicity or ethnic group. A language variety is often associated with an ethnic group when its members use language as a marker of solidarity. They may also use it to distinguish themselves from a larger, sometimes oppressive, language group when they are a minority population.

A familiar example of an oppressed ethnic group with a distinctive dialect is African-Americans. They have a unique history among minorities in the United States, with their centuries-long experience as captive slaves and subsequent decades under Jim Crow laws. (These laws restricted their rights after their emancipation from slavery.) With the Civil Rights Acts of 1964 and 1968 and other laws, African-Americans gained legal rights to access public places and housing, but it is not possible to eliminate racism and discrimination only by passing laws; both still exist among the white majority. It is no longer “politically correct” to openly express racism, but it is much less frowned upon to express negative attitudes about African-American Vernacular English (AAVE). Typically, it is not the language itself that these attitudes are targeting; it is the people who speak it.

As with any language variety, AAVE is a complex, rule-driven, grammatically consistent language variety, a dialect of American English with a distinctive history. A widely accepted hypothesis of the origins of AAVE is as follows. When Africans were captured and brought to the Americas, they brought their own languages with them. But some of them already spoke a version of English called a pidgin. A pidgin is a language that springs up out of a situation in which people who do not share a language must spend extended amounts of time together, usually in a working environment. Pidgins are the only exception to the Language Universal number 3 (all languages are systematic, rule driven, and equally complex overall, and equally capable of expressing any idea that the speaker wishes to convey).

There are no primitive languages, but a pidgin is a simplified language form, cobbled together based mainly on one core language, in this case English, using a small number of phonemes, simplified syntactic rules, and a minimal lexicon of words borrowed from the other languages involved. A pidgin has no native speakers; it is used primarily in the environment in which it was created. An English-based pidgin was used as a common language in many areas of West Africa by traders interacting with people of numerous language groups up and down the major rivers. Some of the captive Africans could speak this pidgin, and it spread among them after the slaves arrived in North America and were exposed daily to English speakers. Eventually, the use of the pidgin expanded to the point that it developed into the original forms of what has been called a Black English plantation creole. A creole is a language that develops from a pidgin when it becomes so widely used that children acquire it as one of their first languages. In this situation it becomes a more fully complex language consistent with Universal number 3.

Not all African-Americans speak AAVE, and people other than African-Americans also speak it. Anyone who grows up in an area where their friends speak it may be a speaker of AAVE, like the rapper Eminem, a white man who grew up in an African-American neighborhood in Detroit. Present-day AAVE is not homogeneous; there are many regional and class variations. Most variations have several features in common, for instance, two phonological features: the dropped /r/ typical of some New York dialects, and the pronunciation of the “th” sound of words like this and that as a /d/ sound, dis and dat. Most of the features of AAVE are also present in many other English dialects, but those dialects are not as severely stigmatized as AAVE is. It is interesting, but not surprising, that AAVE and Southern dialects of white English share many features. During the centuries of slavery in the South, African-American slaves outnumbered whites on most plantations. Which group do you think had the most influence on the other group’s speech? The African-American community itself is divided about the acceptability of AAVE. It is probably because of the historical oppression of African-Americans as a group that the dialect has survived to this day, in resistance to the majority white society’s disapproval.

Language and Gender

In any culture that has differences in gender role expectations (and all cultures do), there are differences in how people talk based on their sex and gender identity. These differences have nothing to do with biology. Children are taught from birth how to behave appropriately as a male or a female in their culture, and different cultures have different standards of behavior. It must be noted that not all men and women in a society meet these standards, but when they do not, they may pay a social price. Some societies are fairly tolerant of violations of their standards of gendered behavior, but others are less so.

In the United States, men are generally expected to speak in a low, rather monotone pitch; it is seen as masculine. If they do not sound sufficiently masculine, American men are likely to be negatively labeled as effeminate. Women, on the other hand, are freer to use their entire pitch range, which they often do when expressing emotion, especially excitement. When a woman is a television news announcer, she will modulate the pitch of her voice to a sound more typical of a man in order to be perceived as more credible. Women tend to use minimal responses in a conversation more than men. These are the vocal indications that one is listening to a speaker, such as m-hm, yeah, I see, wow, and so forth. They tend to face their conversation partners more and use more eye contact than men. This is one reason women often complain that men do not listen to them.

Deborah Tannen, a professor of linguistics at Georgetown University in Washington, D.C., has done research for many years on language and gender. Her basic finding is that in conversation women tend to use styles that are relatively cooperative, to emphasize an equal relationship, while men seem to talk in a more competitive way in order to establish their positions in a hierarchy. She emphasizes that both men and women may be cooperative and competitive in different ways.[7]

Other societies have very different standards for gendered speech styles. In Madagascar, men use a very flowery style of talk, using proverbs, metaphors, and riddles to make a point indirectly and to avoid direct confrontation. Women, on the other hand, speak bluntly and say directly what is on their minds. Both men and women admire men’s speech and think of women’s speech as inferior. When a man wants to convey a negative message to someone, he will ask his wife to do it for him. In addition, women control the marketplaces where tourists bargain for prices because it is impossible to bargain with a man who will not speak directly. It is for this reason that Malagasy women are relatively independent economically.

In Japan, women were traditionally expected to be subservient to men and speak using a “feminine” style, appropriate for their position as wife and mother, but Japanese culture has been changing in recent decades, and more and more women are joining the work force and achieving positions of relative power. Such women must find ways of speaking that maintain their feminine identities and at the same time express their authority in interactions with men, a challenging balancing act. Women in the United States face a similar challenge, to a certain extent. Even Margaret Thatcher, prime minister of the United Kingdom, took voice training to lower the pitch of her voice so that she would sound more authoritative.

The Deaf Culture and Signed Languages

Deaf people constitute a linguistic minority in many societies worldwide based on their common experience of life. This often results in their identification with a local Deaf culture. Such a culture may include shared beliefs, attitudes, values, and norms, like any other culture, and it is invariably marked by communication through the use of a sign language. It is not enough to be physically deaf (spelled with a lower case “d”) to belong to a Deaf culture (written with a capital “D”). In fact, one does not even need to be deaf. Identification with a Deaf culture is a personal choice. It can include family members of deaf people or anyone else who associates with deaf people, as long as the community accepts them. Especially important, members of Deaf culture are expected to be competent communicators in the sign language of the culture. In fact, there have been profoundly deaf people who were not accepted into the local Deaf community because they could not sign. In some deaf schools, at least in the United States, the practice has been to teach deaf children how to lip read and speak orally, and to prevent them from using a signed system. They were expected to blend in with the hearing community as much as possible. This is called the oralist approach to education, but members of the Deaf community consider it a threat to the existence of their culture. For the same reason, the development of cochlear implants, which can provide a sense of sound to some deaf children, has been controversial in U.S. Deaf communities. Members often have a positive attitude toward their deafness and do not consider it to be a disability. To many of them, regaining hearing represents disloyalty to the group and a desire to leave it.

According to the World Federation of the Deaf, there are over 200 distinct sign languages in the world, and they are not mutually intelligible. They are all considered by linguists to be true languages, consistent with linguistic definitions of all human languages. They differ only in the fact that they are based on a gestural-visual rather than a vocal-auditory sensory mode. Each is a true language with basic units comparable to phonemes but composed of hand positions, shapes, and movements, plus some facial expressions. Each has its own unique set of morphemes and grammatical rules. American Sign Language (ASL), too, is a true language separate from English; it is not English on the hands. As with other signed languages, it is possible to sign a word-for-word translation of English, using finger spelling for some words, which is helpful in teaching deaf children to read, but Deaf signers prefer their own language, ASL, for ordinary interactions. Of course, Deaf culture identity intersects with other kinds of cultural identity, like nationality, ethnicity, gender, class, and sexual orientation, so each Deaf culture is not only small but very diverse.

LANGUAGE CHANGE: HISTORICAL LINGUISTICS

Recall the language universal stating that all languages change over time. In fact, it is not possible to keep them from doing so. How and why does this happen? The study of how languages change is known as historical linguistics. The processes, both historical and linguistic, that cause language change can affect all of its systems: phonological, morphological, lexical, syntactic, and semantic.

Historical linguists have placed most of the languages of the world into taxonomies, groups of languages classified together based on related words (cognates) that have similar forms and the same or similar meanings. Language taxonomies create something like a family tree of languages. For example, words in the Romance family of languages, called sister languages, show great similarities to each other because they have all derived from the same “mother” language, Latin (the language of Rome). In turn, Latin is considered a “sister” language to Sanskrit (once spoken in India and now the mother language of many of India’s modern languages, and still used as a liturgical language of Hinduism) and classical Greek. Their “mother” language is called “Indo-European,” which is also the mother (or grandmother!) language of most of the other languages of Europe.

Let’s briefly examine the history of the English language as an example of these processes of change. England was originally populated by Celtic peoples, the ancestors of today’s Irish, Scots, and Welsh. The Romans invaded the island of Britain in the first century AD, bringing their Latin language with them. This was the edge of their empire; their presence there was not as strong as it was on the European mainland. After Roman rule in Britain ended in the early fifth century AD, Germanic-speaking tribes from northern Europe (the “barbarians”) settled there, and a number of those related Germanic languages came to be spoken in various parts of what would become England. These included the languages of the Angles and the Saxons, whose names form the origin of the term Anglo-Saxon and of the name of England itself: Angle-land. At this point, the languages spoken in England included those Germanic languages, which gradually merged into various dialects of English, with a small influence from the Celtic languages, some Latin from the Romans, and a large influence from Viking invaders. This form of English, generally referred to as Old English, lasted for about 500 years. In 1066 AD, England was invaded by William the Conqueror from Normandy, France. The new Norman rulers brought the French language with them. French is a Latin-based language, and it is by far the greatest source of the Latin-based words in English today; almost 10,000 French words were adopted into the English of the time period. This was the beginning of Middle English, which lasted another 500 years or so.

The change to Modern English had two main causes. One was the invention of the printing press in the fifteenth century, which resulted in a deliberate effort to standardize the various dialects of English, mostly in favor of the dialect spoken by the elite. The other source of change, during the fifteenth and sixteenth centuries, was a major shift in the pronunciation of many of the vowels, known as the Great Vowel Shift. Middle English words like hus and ut came to be pronounced house and out. Many other vowel sounds also changed in a similar manner.

None of the early forms of English are easily recognizable as English to modern speakers. Here is an example of the first two lines of the Lord’s Prayer in Old English, from 995 AD, before the Norman Invasion:

Fæder ūre, ðū ðē eart on heofonum,

Sī ðīn nama gehālgod.

Here are the same two lines in Middle English, the English spoken from 1066 AD until about 1500 AD, taken from the Wycliffe Bible of 1389 AD:

Our fadir that art in heuenes,

halwid be thi name. [8]

The following late Middle English/early Modern English version, from the 1526 AD Tyndale Bible, shows some results of grammarians’ efforts to standardize spelling and vocabulary once the printing press made wider distribution of the printed word possible:

O oure father which arte in heven,

halowed be thy name.

Finally, this example is from the King James Version of the Bible (1611 AD), written in the early Modern English of Shakespeare’s time. It is nearly the same archaic form that many Christians still use today.

Our father which art in heauen,

hallowed be thy name.

Since the beginning of the Modern English period, the language has been further affected by exposure to other languages and dialects worldwide. This exposure brought in new words and changed the meanings of old ones. Other changes to the sound system resulted from phonological processes that may or may not be attributable to the influence of other languages. Many more changes, especially in recent decades, have been driven by cultural and technological developments that require new vocabulary.

Try This: Just think of all the words we use today that have either changed their primary meanings, or are completely new: mouse and mouse pad, google, app, computer (which used to be a person who computes!), texting, cool, cell, gay. How many more can you think of?

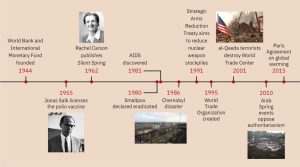

GLOBALIZATION AND LANGUAGE

Globalization is the spread of people, their cultures and languages, products, money, ideas, and information around the world. Globalization is nothing new; it has been happening throughout human existence, but for the last 500 years it has been increasing in scope and pace, primarily due to improvements in transportation and communication. Beginning with the voyages of the late fifteenth century, English explorers, and later colonists, spread their language to colonies in all parts of the world. English is now one of the three or four most widely spoken languages. It has official status in at least 60 countries, and it is widely spoken in many others. Other colonial powers also spread their languages, especially Spanish, French, Portuguese, Arabic, and Russian. Like English, each has its regional variants. One effect of colonization has often been the suppression of local languages in favor of the language of the more powerful colonizers.

In the past half century, globalization has been dominated by the spread of North American popular culture and language to other countries. Today it is difficult to find a country that does not have American music, movies, and television programs, Coca-Cola and McDonald’s, or many other artifacts of life in the United States, along with the English terms that go with them.

In addition, people are moving from rural areas to cities in their own countries or migrating to other countries in unprecedented numbers. Many are refugees fleeing violence; others have found it increasingly difficult to survive economically in their own countries. This mass movement of people has led to the ongoing extinction of large numbers of the world’s languages as people abandon their home regions and languages in order to assimilate into their new homes.

Language Shift, Language Maintenance, and Language Death

Approximately 6,000 languages survive today, yet about half of the world’s more than seven billion people speak one of only ten of them: Mandarin Chinese, two languages from India, Spanish, English, Arabic, Portuguese, Russian, Japanese, and German. Many of the rest of the world’s languages are spoken by a few thousand people, or even just a few hundred, and most of them are threatened with extinction, a process called language death. It has been predicted that by the end of this century up to 90 percent of the languages spoken today will be gone. The rapid disappearance of so many languages is of great concern to linguists and anthropologists alike. When a language is lost, its associated culture and its unique body of knowledge and worldview are lost with it forever. Recall Whorf’s hypothesis that the language we speak shapes how we perceive and think about the world. An interesting website shows short videos of the last speakers of several endangered languages, including a speaker of an African “click language.”

Some minority languages are not threatened with extinction, even though they are spoken by relatively few people. Others, spoken by many thousands, may be doomed. What determines which survive and which do not? Smaller languages that are associated with a specific country are likely to survive. Languages spoken across many national boundaries are also less threatened, such as Quechua, an indigenous language spoken throughout much of South America, including Colombia, Ecuador, Peru, Chile, Bolivia, and Argentina. The great majority of the world’s languages, however, are spoken by people with minority status in their countries. After all, there are only about 193 countries in the world, and over 6,000 languages are spoken in them, an average of more than 30 languages per country.

The survival of the language of a given speech community is ultimately based on the accumulation of individual decisions by its speakers to continue using it or to abandon it. The abandonment of a language in favor of a new one is called language shift. These decisions are usually influenced by the society’s prevailing attitudes. In the case of a minority speech community surrounded by a more powerful majority, an individual might keep or abandon the native language depending on a complex array of factors. The most important factors are the attitudes of the minority people toward themselves and their language, and the attitude of the majority toward the minority.

Language is a marker of identity, an emblem of group membership and solidarity, but that marker may have a downside as well. If the majority looks down on the minority as inferior in some way and discriminates against them, some members of the minority group may internalize that attitude and try to blend in with the majority by adopting its culture and language. Others might value their identity as members of that stigmatized group more highly, in spite of the discrimination by the majority, and continue to speak their language as a symbol of resistance against the more powerful group. Spanish, for example, is a minority language in the United States, yet it shows no sign of dying out either there or in the world at large. It is the primary language in many countries, and in the United States it is by far the largest minority language.

A former student of mine, James Kim (pictured in Figure 3 as a child with his brother), illustrates some of the common dilemmas a child of immigrants might go through as he loses his first language. Although he was born in California, he spoke only Korean for the first six years of his life. Then he went to school, where he was the only Korean child in his class. He quickly learned English, the language of instruction and the language of his classmates. Under peer pressure, he began refusing to speak Korean, even to his parents, who spoke little English. His parents tried to encourage him to keep his Korean language and culture by sending him to Korean school on Saturdays, but soon he refused to attend. As a college student, James began to regret the loss of his parents’ language, not to mention the strain it had placed on his relationship with them. He tried to take a college class in Korean, but it was too difficult and time-consuming. After consulting with me, he created a six-minute radio piece called “First Language Attrition: Why My Parents and I Don’t Speak the Same Language” while he was an intern at a National Public Radio station. He interviewed his parents in the piece and was embarrassed to realize he needed an interpreter.[9] Since that time, he has started taking Korean lessons again, and he took his first trip to Korea with his family during the summer of 2014. He was very excited about the prospect of reconnecting with his culture, with his first language, and especially with his parents.

The Korean language as a whole is in no danger of extinction, but many Korean-speaking immigrant communities in the United States, like other minority language groups in many countries, are having difficulty maintaining their language and culture. The most successful communities live in large, geographically coherent neighborhoods and maintain close ties to their homeland through frequent visits, telephone calls, and email contact with relatives. They may also receive a steady stream of new immigrants from the home country. This is the case with most Spanish-speaking communities in the United States, but less so with the Korean community.[10]

Native Americans are another oppressed minority group that has struggled with language and culture loss. Many groups were completely wiped out by the European colonizers, some by deliberate genocide but the great majority (up to 90 percent) by the diseases that the white explorers brought with them, against which the Native Americans had no immunity. By the late nineteenth century, the American government had largely stopped trying to kill Native Americans and instead tried to assimilate them into the white majority culture. It did this in part by forcing Native American children to attend boarding schools where they were required to cut their hair, practice Christianity, and speak only English. When they were allowed to go back home years later, they had lost their languages and their culture, but had not become culturally “white” either. The status of Native Americans in the nineteenth and twentieth centuries as a scorned minority prompted many to hide their ethnic identities even from their own children. In this way, the many hundreds of original Native American languages in the United States have dwindled to fewer than 140 spoken today, according to UNESCO. More than half of those could disappear in the next few years, since many are spoken by only a handful of older members of their tribes. However, a number of Native American tribes have recently been making efforts to revive their languages and cultures, with the help of linguists and often by using texts and old recordings made by early linguists like Edward Sapir.

Revitalization of Indigenous Languages

A fascinating example of a tribal language revitalization program is that of the Wampanoag tribe in Massachusetts. The Wampanoag were the Native Americans who met the Pilgrims when they landed at Plymouth Rock, helped them survive in their first year, and joined them at the first Thanksgiving. The contemporary descendants of that historic tribe still live in Massachusetts, but Wampanoag people had never thought it possible to bring back their language, because no one had spoken it for more than a century.

A young Wampanoag woman named Jessie Little Doe Baird (pictured in Figure 4 with her daughter Mae) was inspired by a series of dreams in which her ancestors spoke to her in their language, which she of course did not understand. She eventually earned a master’s degree in Algonquian linguistics at the Massachusetts Institute of Technology in Cambridge, Massachusetts, and launched a project to bring her language back from the dead. This process was made possible by the existence of a large collection of documents written phonetically in Wampanoag during the seventeenth and eighteenth centuries, including translations of the King James Bible. She also worked with speakers of other languages in the Algonquian family to help reconstruct the language. The community has established a school to teach the language to the children and promote its use among the entire community. Her daughter Mae is among the first new native speakers of Wampanoag.[11]

How Is the Digital Age Changing Communication?

The invention of the printing press in the fifteenth century began a series of technological transformations that made it possible to spread information and ideas in European languages across time and space through the printed word. Recent advances in travel and digital technology are rapidly transforming communication; now we can be in contact with almost anyone, anywhere, in seconds. However, it could be said that the new age of instantaneous access to everything and everyone is actually continuing a social divide that started with the printing press.

In the fifteenth century, few people could read and write, so only a tiny educated minority was in a position to benefit from printing. Today, only those who have computers and the skills to use them, mainly the educated and relatively wealthy, have access to this brave new world of communication. Some schools have adopted computers and tablets for their students, but these schools are more often found in wealthier neighborhoods. Thus, technology is continuing to contribute to the growing gap between the economic haves and have-nots.

There is also a digital generation gap between the young, who have grown up with computers, and the older generations, who have had to learn to use computers as adults. These two generations have been referred to as digital natives and digital immigrants.[12] The difference between the two groups can be compared to that of children versus adults learning a new language; learning is accomplished much more easily by the young.

Computers, and especially social media, have made it possible for millions of people to connect with each other for purposes of political activism, including “Occupy Wall Street” in the United States and the “Arab Spring” in the Middle East and North Africa. Some anthropologists have introduced computers and cell phones to the people they studied in remote areas, and in this way they were able to stay in contact after finishing their ethnographic work. Those people, in turn, gained greater access to the outside world.